As Paystack grew from 10 engineers at the start of 2019 to 29 in the summer of 2020, our deployment process became increasingly inefficient, unable to handle a sharp rise in daily deployments. We found ourselves reliant on an out-of-sync dev branch, with engineers merging their changes multiple times, resolving merge conflicts, and sometimes waiting as long as 20 minutes for deployments to complete. At best, it was frustrating; at worst, it was a serious drain on productivity.

The solution, we decided, was to give engineers environments in which they could test their changes as needed, enabling them to be more productive, deploy code faster, and make as many configuration changes as they wanted in a replica of the production environment. Here, we’ll share how we leveraged Kubernetes to create these on-demand environments in a bid to reduce deployment time and improve developer efficiency.

The problem

Until June 2020, we had a simple deployment setup for our public API repository: The master branch deployed to the production environment, and the dev branch deployed to the staging environment. At Paystack, every new feature must be extensively tested in staging, so every engineer has to merge their changes into the dev branch before they can merge and deploy to production.

This system worked fine for the better part of three years, mostly because only a handful of engineers worked on each repository at a time. But as teams grew and multiplied, engineers began to experience frequent merge conflicts and deployment failures. The dev branch quickly fell out of sync with master, and we could no longer tell whether a feature that worked in staging would work in production. With the number of engineers on our team expected to double by the end of 2020, we knew we had to make a change.

The solution

To ease this bottleneck, we determined that developers needed isolated environments that could serve as individual staging environments—a workspace where they could make changes without worrying about breaking things.

At first, we considered creating complete environments on developers’ laptops using either Docker Compose or Minikube, which would allow them to build and test features in isolation. A notable drawback, however, was the strain this would put on developers’ machines. Engineers would also need to get 17 different applications communicating with one another. Grasping the context of applications spread across nine teams, and how every part of the architecture interacts, is not for the faint of heart. It would be time-consuming, and debugging would be a nightmare: engineers would have to work out whether an error came from a downstream misconfiguration or from a change to the artifact they were working on.

Instead, we decided to create these on-demand environments in the cloud, in Kubernetes. This would remove the need for local computing resources and offer an elastic platform that could encapsulate application templates and inject variables such as DNS and environment configuration on the fly, eliminating the need for that additional context.
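To make “inject variables such as DNS and environment configuration on the fly” concrete, here is a minimal sketch that fills a per-application template with values that differ between environments. It assumes templates are plain text with `$placeholder` markers and that each environment maps to its own namespace; the template contents, the `checkout` app, and the `staging.example.internal` domain are hypothetical, not Paystack’s actual setup.

```python
from string import Template

# Hypothetical application template: placeholders are filled in per environment.
APP_TEMPLATE = Template(
    "APP_NAME=$app_name\n"
    "PUBLIC_URL=https://$app_name.$env_name.$base_domain\n"
    "DATABASE_HOST=postgres.$env_name.svc.cluster.local\n"
)


def render_app_config(app_name: str, env_name: str, base_domain: str) -> str:
    """Fill the template for one app in one environment.

    Template.substitute raises KeyError if any placeholder is left unfilled,
    so a misconfigured environment fails loudly instead of silently.
    """
    return APP_TEMPLATE.substitute(
        app_name=app_name, env_name=env_name, base_domain=base_domain
    )
```

Calling `render_app_config("checkout", "env-ada", "staging.example.internal")` yields a public URL and a database host scoped to that one environment, so the same template serves every developer without anyone editing it by hand.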

Summiting the learning curve

To give the transition to this new workflow its best shot at success, we knew we’d need total buy-in from our engineers. A steep learning curve can be a major deterrent to adopting any new implementation, no matter how glorious, so as we rolled out Kubernetes as the foundation of our elastic, on-demand staging environments, we were determined to make that curve surmountable.

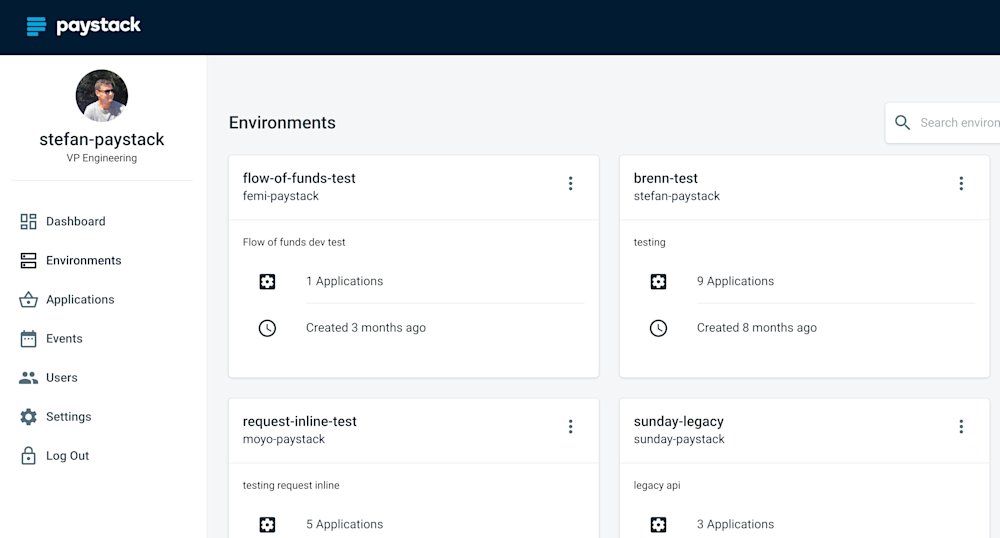

Kubernetes isn’t the easiest platform to learn, especially if containerization isn’t already part of your engineering culture. When we started this journey, none of our applications were cloud-ready, nor did they adhere to the Twelve-Factor App methodology, which we’d decided to adopt to ensure portability and durability. As with so many engineering problems, we felt we could address the complexity with abstraction and interfaces. We built an abstraction layer on top of Kubernetes that gave developers a visual interface for spinning up new environments and selecting the applications they wanted to run, saving them the time spent setting up workstations and freeing them to focus on the task at hand.

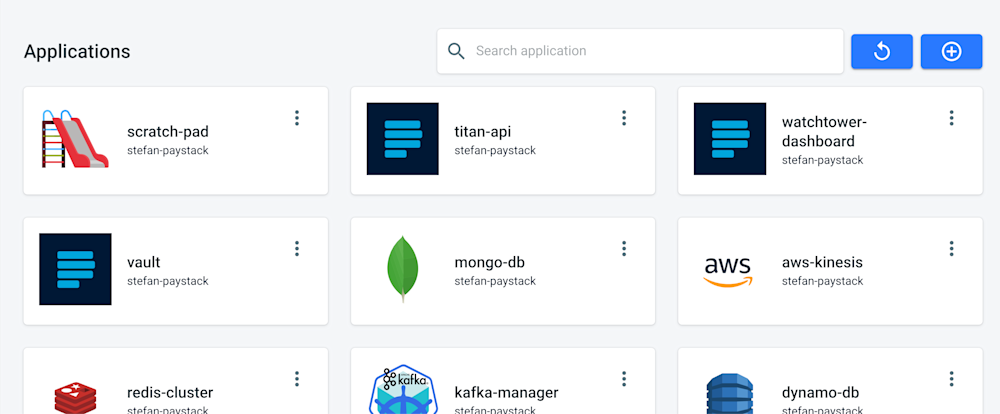

Ease of use would be essential to full-team adoption, so simplicity guided our design decisions: We built an à la carte dashboard that presents the user with a menu of services to choose from, not unlike browsing an e-commerce site, and pared back any nonessentials that had the potential to overwhelm the user, like configuration logs and application stats.

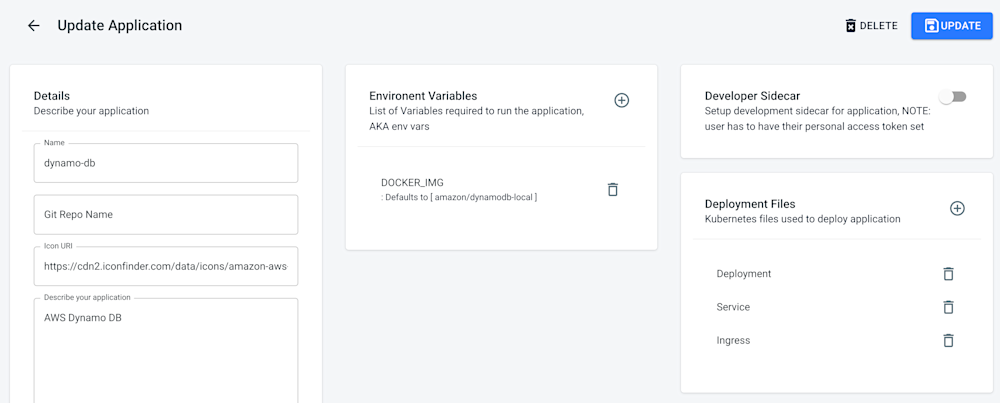

The interface also simplifies the process of onboarding applications to Kubernetes. Typically, this requires myriad resources employed in concert (a deployment manifest, a service manifest, an ingress manifest, and more), but we were able to replace all that with a few clicks in the interface. Behind the scenes, we created predefined templates that generate manifest files, which are then applied to the cluster. Unique public URLs are generated on our private DNS, and routing configuration is injected into the Nginx service, which enables one-click deployment and cross-team collaboration, especially on frontend interfaces.
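A minimal sketch of what such predefined templates could produce, assuming a Python service that builds the three core Kubernetes objects (Deployment, Service, Ingress) as plain dictionaries. The `app` label key, the `nginx` ingress class, the port numbers, and the host naming scheme `<app>.<env>.<base_domain>` are illustrative assumptions; the field layout follows the `apps/v1`, `v1`, and `networking.k8s.io/v1` APIs.

```python
from typing import Any

Manifest = dict[str, Any]


def onboarding_manifests(app: str, image: str, env: str, base_domain: str,
                         container_port: int = 8080) -> list[Manifest]:
    """Build Deployment, Service and Ingress manifests for one app in one environment.

    The environment name doubles as the namespace, and the public host is
    derived from app + environment so every environment gets a unique URL.
    """
    labels = {"app": app}  # hypothetical labelling convention
    host = f"{app}.{env}.{base_domain}"

    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "namespace": env, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": app,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                    }]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app, "namespace": env},
        "spec": {
            "selector": labels,
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": app, "namespace": env},
        "spec": {
            "ingressClassName": "nginx",  # assumes an NGINX ingress controller
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": app, "port": {"number": 80}}},
                }]},
            }],
        },
    }
    return [deployment, service, ingress]
```

Each dictionary could be serialized to JSON or YAML and applied with `kubectl apply -f`, or handed to a Kubernetes client library. Keeping the template in code means one click in the interface maps to one function call with a handful of parameters.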

We also separated environments from one another using Kubernetes namespaces, which helped us avoid routing issues. Namespaces provide logical separation within a cluster while still allowing services in different namespaces to communicate, which makes them handy for organizing a cluster into virtual subclusters.
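One way to picture the namespace-per-environment approach: derive a valid namespace name from an environment’s free-form name, and address services through cluster DNS, where a Service is reachable at `<service>.<namespace>.svc.<cluster-domain>` (the cluster domain is `cluster.local` by default). The slugging rules and function names below are assumptions for illustration.

```python
import re

# Namespace names must be RFC 1123 labels: lowercase alphanumerics or '-',
# starting and ending with an alphanumeric, at most 63 characters.
_DNS1123_LABEL = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")


def namespace_for(env_name: str) -> str:
    """Turn a free-form environment name into a valid namespace name."""
    slug = re.sub(r"[^a-z0-9-]+", "-", env_name.lower()).strip("-")
    slug = slug[:63].strip("-")  # enforce the length limit, then re-trim
    if not _DNS1123_LABEL.match(slug):
        raise ValueError(f"cannot derive a namespace from {env_name!r}")
    return slug


def namespace_manifest(namespace: str) -> dict:
    """Minimal v1 Namespace object for one developer environment."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": namespace}}


def service_address(service: str, namespace: str,
                    cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name other namespaces can use to reach a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"
```

Within a namespace, a bare service name such as `postgres` resolves to that environment’s own copy, which is what keeps two developers’ environments from routing into each other. Cross-namespace traffic is allowed by default unless a NetworkPolicy restricts it.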

Building for power users

All this encapsulation was designed to benefit developers at every level of Kubernetes comfort, but we also wanted to cater to power users by providing kubeconfig files on demand and making it possible to add new templates. For CI/CD we used GitHub Actions workflow templates, which needed just one or two small changes to notify the abstraction layer of a new build; new builds would then be auto-deployed from a preferred branch based on the user’s environment config.
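To illustrate the “notify the abstraction layer, then auto-deploy from a preferred branch” flow, here is a sketch of the decision the abstraction layer would make when a CI job reports a new build. It assumes the workflow sends the app name, branch, and image reference after pushing an image, and that each environment records which branch it tracks for each app; the payload shape and class names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BuildNotification:
    """Hypothetical payload a CI job could send after pushing an image."""
    app: str
    branch: str
    image: str  # e.g. "registry.example.com/checkout:3f2a1c9"


@dataclass
class EnvironmentConfig:
    """One developer environment and the branch it tracks for each app."""
    name: str
    tracked_branches: dict[str, str] = field(default_factory=dict)


def environments_to_redeploy(note: BuildNotification,
                             envs: list[EnvironmentConfig]) -> list[str]:
    """Names of environments whose preferred branch for this app was just built."""
    return [env.name for env in envs
            if env.tracked_branches.get(note.app) == note.branch]
```

With this split, the CI side only has to report what it built; which environments pick the build up is decided from each user’s environment config, so adding the notification step to an existing workflow stays a small change.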

The benefits of isolation extend to infrastructure components like databases, message brokers, and caching layers: developers can experiment with them without worrying about affecting their teammates’ work, so we made these available on the application menu as well. Where we lacked a predefined template, our power user tooling made it easy to add a new item to the menu, as long as the component was packaged as a container image and the image was freely available in a Docker registry.
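A minimal sketch of an extensible menu, assuming each item needs little more than a name, a container image, and a port. The `MenuItem` and `Catalog` names are hypothetical, and the images are public Docker Hub examples rather than Paystack’s actual catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MenuItem:
    """One entry on the environment menu: any service packaged as a container image."""
    name: str
    image: str  # must be pullable from a registry the cluster can reach
    port: int


class Catalog:
    """Hypothetical in-memory registry of menu items that power users can extend."""

    def __init__(self) -> None:
        self._items: dict[str, MenuItem] = {}

    def add(self, item: MenuItem) -> None:
        if item.name in self._items:
            raise ValueError(f"{item.name!r} is already on the menu")
        if not 0 < item.port < 65536:
            raise ValueError(f"invalid port {item.port}")
        self._items[item.name] = item

    def get(self, name: str) -> MenuItem:
        return self._items[name]

    def menu(self) -> list[str]:
        """Names shown on the dashboard, in alphabetical order."""
        return sorted(self._items)


catalog = Catalog()
# Infrastructure components sit on the same menu as applications.
catalog.add(MenuItem("postgres", "postgres:13", 5432))
catalog.add(MenuItem("redis", "redis:6", 6379))
catalog.add(MenuItem("rabbitmq", "rabbitmq:3-management", 5672))
```

A real entry would likely also carry default configuration; the official `postgres` image, for instance, will not start without `POSTGRES_PASSWORD` or an explicit auth-method override.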

Never stop improving

The platform’s rollout one month later, in July 2020, was received with much internal excitement and fanfare. There were clear and immediate benefits over our old workflow: Engineers were finally able to test assumptions, debug, and experiment without affecting other teams.

There were obstacles, too. Having more developer environments increased our cloud bill by around 5 percent, but the boost to developer productivity and confidence was worth it, and by using AWS spot instances we were able to cut about 30 percent of that increase, bringing the net rise to roughly 3.5 percent. We also noticed we’d missed a few features. For example, to keep the interface simple, we hadn’t exposed the logs from the underlying pods, but engineers needed them to debug problems with their applications, so we added them in the next release. With a couple of design tweaks, we were able to present the logs on the interface without adding too much clutter.
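Surfacing pod logs in the interface comes down to two Kubernetes API calls: list the pods behind an application, then read each pod’s log. The sketch below assumes the official Python client (`kubernetes`), whose `CoreV1Api` exposes `list_namespaced_pod` and `read_namespaced_pod_log`; the `app=<name>` label selector and the 200-line default are assumptions. The API object is passed in, so the function can also be exercised with a fake.

```python
def recent_logs(core_v1, namespace: str, app: str,
                tail_lines: int = 200) -> dict[str, str]:
    """Return the last `tail_lines` log lines of every pod labelled app=<app>.

    `core_v1` is expected to be a kubernetes.client.CoreV1Api instance, or any
    object with the same two methods (handy for testing without a cluster).
    """
    pods = core_v1.list_namespaced_pod(namespace, label_selector=f"app={app}")
    return {
        pod.metadata.name: core_v1.read_namespaced_pod_log(
            name=pod.metadata.name, namespace=namespace, tail_lines=tail_lines
        )
        for pod in pods.items
    }
```

Against a real cluster, this would be called as `recent_logs(client.CoreV1Api(), "env-ada", "checkout")` after `config.load_kube_config()`. Capping the output with `tail_lines` keeps the log panel small, in line with the goal of not cluttering the interface.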

We also observed a common—and far from optimal—workflow: A developer would make changes on a local editor, check the code changes into Git, deploy to the platform, test the changes, notice a few things weren’t quite right, return to the local editor, and do it all over again. Too many steps just to test code in a test environment!

To remedy this, we decided to take the editor to the cloud. We created Docker containers running code-server, a browser-based version of the Visual Studio Code IDE, which lets developers pull and push code with Git and live code inside the environment they’ve created. With “hot reload” enabled on the codebase, which restarts the application as soon as a file changes, they can test their changes without going through the build and deploy cycle, then commit directly from the test environment to make the changes permanent. The abstraction layer continues to manage everything else behind the scenes: DNS, routing, logging, configuration, and restarting applications.
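As a rough sketch of what hot reload involves, assuming a simple polling approach (not necessarily what the platform uses), the following watches file modification times and restarts a child process whenever anything changes. Polling is a stand-in chosen for simplicity; file-watching tools typically use OS notification APIs such as inotify instead. The function names are hypothetical.

```python
import os
import subprocess
import time


def snapshot(root: str) -> dict[str, float]:
    """Map every file under `root` to its last-modified time."""
    mtimes = {}
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames[:] = [d for d in dirnames if d != ".git"]  # skip Git internals
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            try:
                mtimes[path] = os.stat(path).st_mtime
            except FileNotFoundError:
                pass  # file was deleted between listing and stat
    return mtimes


def changed_paths(before: dict[str, float], after: dict[str, float]) -> set[str]:
    """Paths that were added, removed, or modified between two snapshots."""
    return {p for p in before.keys() | after.keys() if before.get(p) != after.get(p)}


def run_with_reload(cmd: list[str], root: str, interval: float = 1.0) -> None:
    """Run `cmd`, restarting it whenever a file under `root` changes."""
    proc = subprocess.Popen(cmd)
    last = snapshot(root)
    try:
        while True:
            time.sleep(interval)
            current = snapshot(root)
            if changed_paths(last, current):
                proc.terminate()  # stop the old process before starting a new one
                proc.wait()
                proc = subprocess.Popen(cmd)
            last = current
    finally:
        proc.terminate()
        proc.wait()
```

Inside an editor container, something like `run_with_reload(["python", "app.py"], "/workspace")` would keep the application running and restart it on every save, so a change can be checked in the environment before anything is committed.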

What we’ve learned

Before we rolled out the platform, we set a few benchmarks to measure success. First was ease of use: The developer environments had to be easy for engineers to learn and employ, which is what motivated us to create an intuitive and easy-to-configure graphical user interface. Within a month of the rollout, engineers were spinning up environments and experimenting in them without any hand-holding. In fact, usage grew enough that we now ask them to destroy environments once they’re done with them to keep resource costs down.

Second was adoption: We knew it would take a while, but we wanted all engineers to adopt the new workflow by January 2021. We scheduled workshops with engineering teams so they could explore the platform, which helped drive adoption. As of March 2021, all developers at Paystack are using these environments to experiment and test assumptions, and they’ve even started using them to showcase proofs-of-concept for new features.

Last was developer efficiency: We wanted to make deployment seamless, enabling engineers to focus more time and energy on the hard problems. Though efficiency is difficult to quantify, conversations with developers suggest the platform has helped reduce deployment failures, merge conflicts, and friction between development, test environments, and production.

Building this platform helped our developers feel more confident in the code, commit, and test cycle and reduced frustration and toil, especially among new engineers struggling to get their test environments just right. In the process, we’ve learned that with the right abstractions, it’s possible to simplify something as complicated as Kubernetes and enable engineers to extract value without needing to understand the minutiae of the platform. As an organization, we’re now more familiar with containerization and other cloud native best practices—and as our team continues to grow, we intend to harness that knowledge to build platforms that increase developer velocity and foster creativity, and further our mission of building payment tools that power the next generation.