In August 2019, I delivered my latest game to a group of generous people who’d backed the Kickstarter campaign for the Wonderville arcade bar in Brooklyn, New York. The game was Restricted Airspace: a lo-fi shooter in the style of the original Star Fox, set in a world modeled on my native Beirut in the 1980s.

I’ve been making video games for almost a decade, both as a freelancer and as an independent developer. My usual tool of choice is Unity, one of the two dominant game engines in widespread use today. Unity provides a cross-platform C# runtime for game logic, a powerful authoring environment to lay out scenes and levels, an asset pipeline that supports every major art package, and a vast ecosystem of tutorials and packages. If you’re looking to get into game development, you could do a lot worse than this engine.

I like Unity a lot—in many ways, I’ve built my career on it—but I wanted to try something different for Restricted Airspace. I’m generally interested in game development tooling and workflows, and I’d been playing with various open-source, mostly web-based technologies to that end for a few years. I had rough prototypes of game-engine components here and there, and I decided to try to cobble them together for this project, mostly to see how far I could get. I fully expected to hit a roadblock and have to start over in Unity, but it never happened. Things just kept working, and working well. For better or worse, the stack of technology built for the web—a platform originally designed to share formatted text documents!—provided just about all the functionality I needed to build a powerful, expressive, and fun game development experience.

I want to share parts of that journey here, focusing on the aspects of the pipeline I didn’t expect to be able to bring over to JavaScript because of my outdated assumptions about the web frontend as a platform. I hope you’ll find it illuminating, both as a peek into the game development process and as an exploration of the power of modern web technologies.

Overall, Restricted Airspace was developed and deployed using Electron. I built most of the engine myself, with core parts written in TypeScript and game logic written in JavaScript. The 3D art was modeled in Blender, which I also used as the level editor. I’ve been calling the engine I made for this game Ajeeb, and I’m gradually releasing it online as I clean up and document its modules. The game itself will be exclusive to backers of the Wonderville Kickstarter campaign until August 2020; since I can’t yet share a public version of the game or its source code, this article includes only code snippets and screenshots.

Graphics: Three.js and WebGL

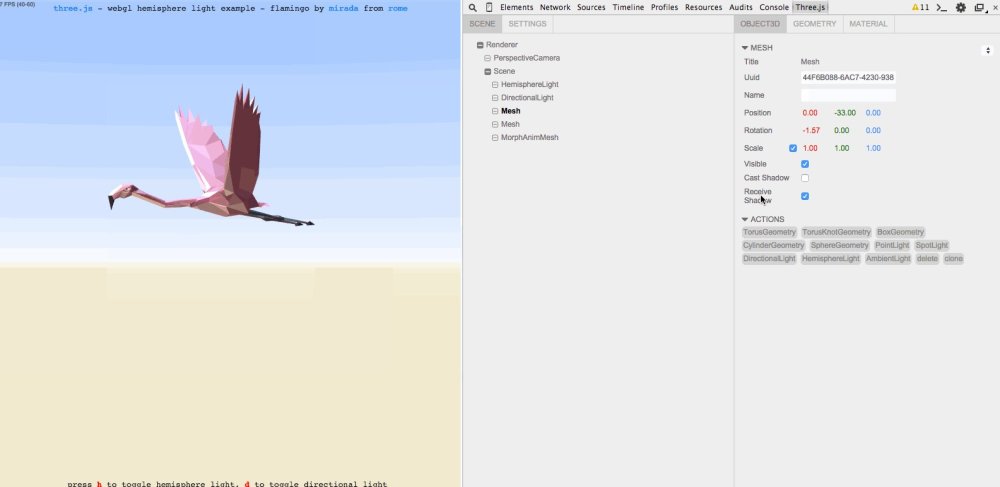

Restricted Airspace is a 3D game, and I used the excellent Three.js as my graphics library. It offers many conveniences and conventional semantics over the lower-level WebGL API. “Conveniences” may be too soft a word here: Three.js provides model loading, a materials system, a scene graph, cameras, a post-processing system, an animation solution, and audio handling. I exported models from Blender in the glTF format and loaded them without issue.

The APIs are designed for ergonomics and ease of use. Manipulating the scene is as simple as reading from and writing to a deeply nested JavaScript object. I was even able to embed game-specific data directly into the scene from Blender using custom properties, which Three.js exposed via each object’s userData property. This meant that in addition to position, rotation, and scale, objects could have properties like “health,” “enemy type,” and “point value.” The combination of geometric and semantic data allowed me to really use Blender as a level editor.
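As a rough sketch of how that embedded data might be consumed at load time, the function below walks a scene graph and collects every object tagged with a given custom property. It relies only on the `children` and `userData` fields that Three.js objects carry. The helper name `collectTagged`, the property names (`enemyType`, `health`, `pointValue`), and the stand-in `level` object are all hypothetical and not taken from the game’s source.

```javascript
// Hypothetical helper: collect every node whose userData defines `key`.
// Works on any object shaped like a Three.js Object3D (children + userData).
function collectTagged(root, key) {
  const found = [];
  const stack = [root];
  while (stack.length > 0) {
    const node = stack.pop();
    if (node.userData && key in node.userData) {
      found.push(node);
    }
    for (const child of node.children || []) {
      stack.push(child);
    }
  }
  return found;
}

// Stand-in for a loaded glTF scene; a real one would come from a loader.
const level = {
  name: "level",
  userData: {},
  children: [
    { name: "jet", userData: { enemyType: "jet", health: 3, pointValue: 100 }, children: [] },
    {
      name: "building",
      userData: {},
      children: [
        { name: "turret", userData: { enemyType: "turret", health: 5, pointValue: 250 }, children: [] },
      ],
    },
  ],
};

for (const enemy of collectTagged(level, "enemyType")) {
  console.log(enemy.name, enemy.userData.health, enemy.userData.pointValue);
}
```

With a real scene, the built-in `traverse` method on Three.js objects performs the same walk; the explicit stack here just keeps the sketch free of dependencies.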

I will admit, the lack of operator overloading made linear algebra more tedious than I was accustomed to in C#, and my functional programmer brain still gasps at all the mutability, but overall, JavaScript and Three.js gave me what I needed to finish my game and have fun doing it.

Three.js is capable of physically accurate rendering, but for Restricted Airspace I opted for a lo-fi look. I achieved this using a full-screen shader and other tricks built on Three.js’s EffectComposer. (That process is documented in detail on my blog.)

Input: The Gamepad API

It’s not news that you can capture keyboard and mouse input in a browser, but I was surprised to learn that you can also access connected gamepads. Most modern video game consoles that have wired gamepads use USB as their physical layer and conform to a standard joystick protocol. The Gamepad API is a web standard that communicates with these devices and, to my astonishment, it Just Works! That is, for modern browsers on major operating systems. (I tested it with wired Microsoft Xbox 360 controllers on all three major operating systems without issue.) If you have such a device handy, you can test it in your browser right now at the HTML5 Gamepad Tester. This API works in Electron just as it does in a standard browser, and Restricted Airspace takes advantage of this to support both a keyboard and a gamepad, if one is available.
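To give a sense of what polling looks like, here is a minimal sketch that turns the array returned by `navigator.getGamepads()` into a small input snapshot. It assumes a controller that reports the “standard” mapping (left stick on axes 0 and 1, bottom face button at index 0). The helper names, the dead-zone value, and the shape of the returned object are my own illustrative choices, not the game’s actual input code.

```javascript
// Ignore small stick movements near the center (stick drift).
const DEAD_ZONE = 0.15; // assumed value; tune per controller

function applyDeadZone(value) {
  return Math.abs(value) < DEAD_ZONE ? 0 : value;
}

// `pads` is the array returned by navigator.getGamepads(); entries may be null.
// Returns a simple input snapshot, or null if no gamepad is connected.
function readGamepad(pads) {
  for (const pad of pads) {
    if (!pad || !pad.connected) continue;
    return {
      x: applyDeadZone(pad.axes[0]), // left stick, horizontal
      y: applyDeadZone(pad.axes[1]), // left stick, vertical
      fire: pad.buttons[0].pressed, // bottom face button in the "standard" mapping
    };
  }
  return null;
}

// In a browser or Electron renderer, poll once per frame:
//   const input = readGamepad(navigator.getGamepads());
//   if (input === null) { /* fall back to the keyboard */ }
```

The Gamepad API is poll-based, so a call like this would typically sit at the top of each frame’s update, with the keyboard as the fallback when it returns `null`.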

Coroutines: ES6 Generators

Unity’s most underrated feature, in my opinion, is its coroutine implementation. Coroutines are subtle: They allow an otherwise normal function to evaluate across multiple frames of a game rather than all at once. Using the yield statement, a coroutine can be made to wait for a single frame, for a number of seconds, or even for another coroutine to complete. This comes up all over the place in my Unity development work. I’ve used coroutines to make procedural animations, state machines, input systems, even the high-level governing logic for entire games.

As a language, C# doesn’t have coroutines per se, but it does have iterators, and Unity used them to cleverly hack in a coroutine implementation. A StartCoroutine method is available that takes a C# iterator and schedules it for execution in the engine. Once a frame, Unity will run every scheduled coroutine up to the next yield statement and remove any that complete or return. The result is code that reads sequentially but runs across multiple frames. Here’s how you might fade out an object across 10 frames:

    // from https://docs.unity3d.com/Manual/Coroutines.html
    IEnumerator Fade()
    {
        // ft starts at 1 and decreases by 0.1 to 0
        for (float ft = 1f; ft >= 0; ft -= 0.1f)
        {
            // set the alpha of the renderer to ft
            Color c = renderer.material.color;
            c.a = ft;
            renderer.material.color = c;
            // wait one frame before the next loop cycle
            // the result is that each iteration of this
            // for loop will evaluate in subsequent frames
            yield return null;
        }
    }

JavaScript also lacks coroutines, but it does have generator functions and a yield statement. They operate enough like C#’s that I was able to build my own coroutine implementation. Here’s how I flash an “ouch” material when the player is damaged:

    // inside the player damage function
    health.value--;
    if (health.value <= 0) {
        // destroy player
    } else {
        // flash ouch material for two frames
        coro.start(function* () {
            asset._mesh.material = ouchMaterial
            // wait for two frames before evaluating the last line
            yield coro.waitFrames(2)
            asset._mesh.material = asset._mesh.originalMaterial
        })
    }

The full implications of this approach merit an article of their own, but I use coroutines for everything in Restricted Airspace. The top-level game loop, the input system, the enemy AI: just about anything that interacts with time is implemented using coroutines. I don’t think I could make games without them.

Were I not able to reproduce this functionality, I would probably have packed things up and returned to Unity. But modern JavaScript runtimes have come far enough that I can keep even this very specific language-adjacent feature when working on the web stack.
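For readers curious what such a scheduler could look like, here is a minimal sketch built on generator functions. The `start` and `waitFrames` names mirror the snippet above, but everything else (the `createScheduler` factory, the `tick` and `count` methods, and the internals) is an assumption for illustration and not Ajeeb’s actual implementation. Each running coroutine is kept as a stack of generators, so yielding another generator pauses the parent until the child finishes.

```javascript
// A minimal coroutine scheduler built on generator functions (illustrative only).
function createScheduler() {
  const running = []; // each entry is a stack of generators, innermost last

  return {
    // Schedule a generator function; it first runs on the next tick().
    start(generatorFn) {
      running.push([generatorFn()]);
    },

    // A generator that pauses for `count` frames when yielded.
    *waitFrames(count) {
      for (let i = 0; i < count; i++) yield;
    },

    // Number of coroutines that have not finished yet.
    count() {
      return running.length;
    },

    // Call once per frame: advance every coroutine to its next pause.
    tick() {
      for (let i = running.length - 1; i >= 0; i--) {
        const stack = running[i];
        while (stack.length > 0) {
          const { value, done } = stack[stack.length - 1].next();
          if (done) {
            stack.pop(); // finished: resume the parent in the same frame
          } else if (value && typeof value.next === "function") {
            stack.push(value); // yielded a generator: run it before the parent
          } else {
            break; // plain yield: wait for the next frame
          }
        }
        if (stack.length === 0) running.splice(i, 1);
      }
    },
  };
}

const coro = createScheduler();
const mesh = { material: "normal" }; // stand-in for a Three.js mesh

coro.start(function* () {
  mesh.material = "ouch";
  yield coro.waitFrames(2);
  mesh.material = "normal";
});

// Stand-in for the render loop: one tick per frame.
for (let frame = 1; frame <= 3; frame++) {
  coro.tick();
  console.log(frame, mesh.material);
}
```

In this sketch the mesh carries the “ouch” material on the first two ticks and reverts on the third. Waiting for a number of seconds could be layered on the same mechanism by yielding a generator that checks a clock each frame.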

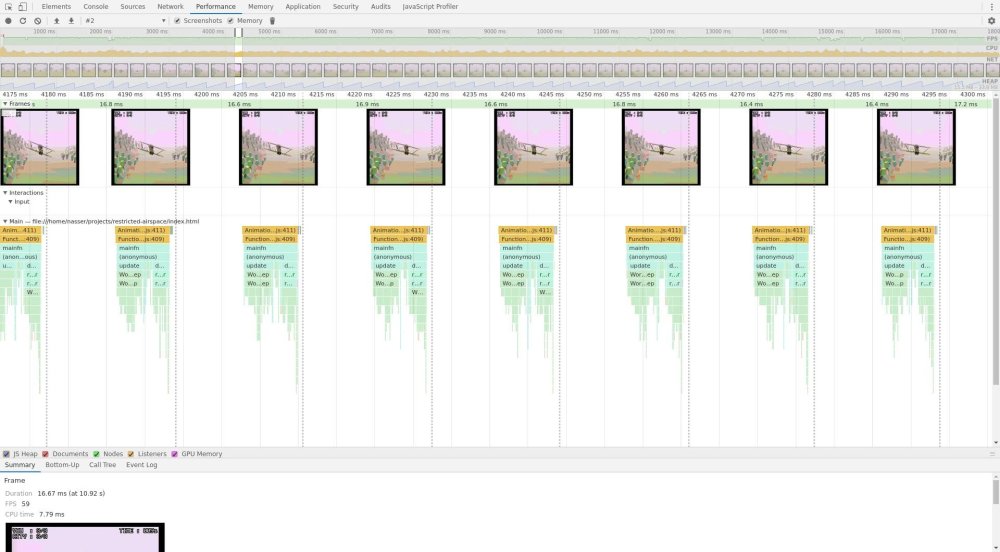

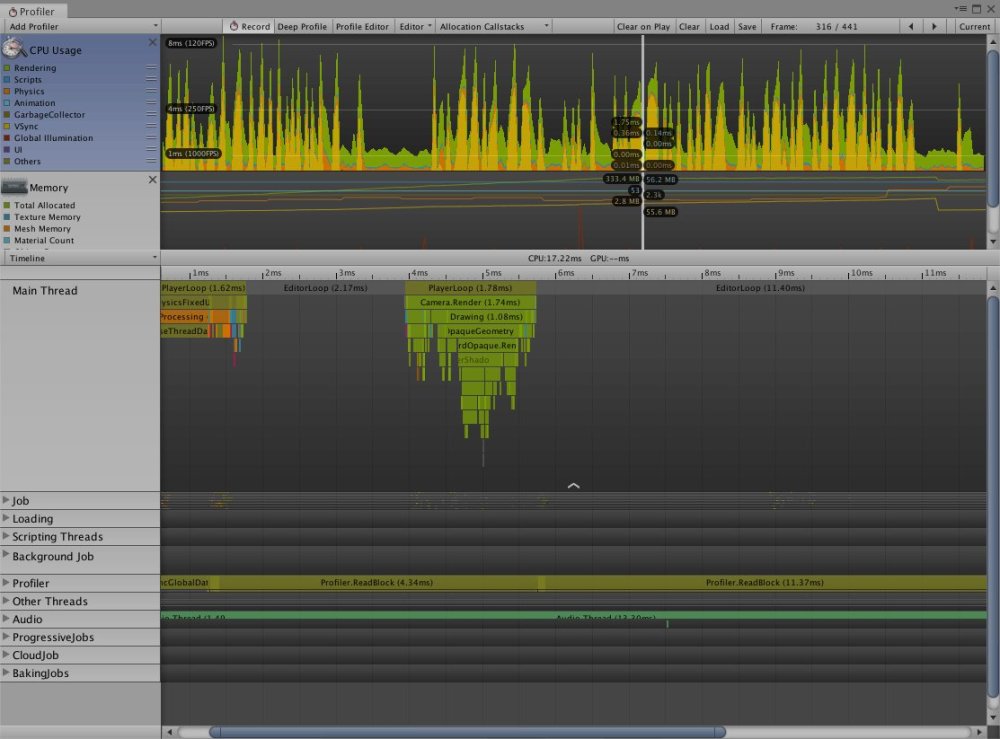

Tooling: Chrome DevTools

A major argument against building a new game framework is that even if you manage to get the player-facing parts together, you still won’t have good developer tooling for a long time, if ever. Profilers and debuggers are incredibly hard to make and take many person-years to get right. To make things worse, game development generally requires specialized tooling because of how different it is from other kinds of software development. Off-the-shelf profilers, for example, have no notion of frames, which are central to game performance tuning. That’s why industrial game engines tend to provide their own built-in game-specific tooling.

Fortunately, games have a big enough overlap with web apps that a lot of the Chrome DevTools built into Electron are directly usable. In particular, Electron’s frame-based profiler made it straightforward to track down performance issues in Restricted Airspace. Under the Performance tab, you can record a “profile” for a portion of the game experiencing performance issues. You can then navigate that profile to inspect each frame individually. DevTools gives you complete call stacks with the time spent in every function, memory allocations, screenshots, and more.
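One way to make recorded profiles easier to read is to label chunks of per-frame work with the standard User Timing API (`performance.mark` and `performance.measure`), whose measures DevTools can display alongside the frames in a recording. The sketch below is a generic illustration, not something taken from the game; the `measureStep` helper and the label names are hypothetical.

```javascript
// Wrap a named chunk of per-frame work in User Timing marks.
// Returns the measured duration in milliseconds.
function measureStep(label, work) {
  const startMark = `${label}:start`;
  const endMark = `${label}:end`;
  performance.mark(startMark);
  work();
  performance.mark(endMark);
  const entry = performance.measure(label, startMark, endMark);
  // Clear the entries so the timeline buffer does not grow every frame.
  performance.clearMarks(startMark);
  performance.clearMarks(endMark);
  performance.clearMeasures(label);
  return entry.duration;
}

// Example: time a stand-in for the game's update step.
let total = 0;
const elapsed = measureStep("update", () => {
  for (let i = 0; i < 1000; i++) total += i;
});
console.log(`update took ${elapsed.toFixed(3)} ms`);
```

In a real game loop, a call like this would wrap the update and render steps inside the frame callback, so each labeled span lines up with the frame it belongs to.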

Similarly, Unity provides a frame-centric profiler, which plays a crucial role in the engine’s development experience. Without this kind of tooling, making a high-performance game is nearly impossible. To my surprise, I found the Electron profiler just as good as Unity’s.

One way that game development is a lot like any other kind of software development is the amount of time you spend squashing bugs. To that end, a high-quality stepping debugger is a godsend. Being able to pause the game at a breakpoint and inspect values means you can make fixes in minutes instead of hours.

Electron’s Chrome DevTools provides a stepping debugger in the same window as the running game. It’s super useful to be able to pop in and check under the hood when needed. Unity doesn’t provide anything like this, but it does provide IDE-integrated remote debugging so that you can place breakpoints in your IDE and enter a debugger when they’re hit. Electron also supports this, though I didn’t end up using it during the development of Restricted Airspace.

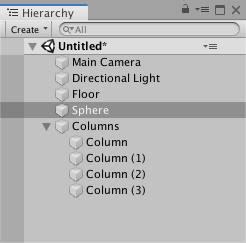

Finally, Electron supports Chrome DevTools Extensions, meaning you can write new inspectors. This is exciting because it means you’re not restricted to the tooling written for frontend developers. I used a Three.js-specific inspector toward the end of the development of Restricted Airspace, and being able to see the scene graph at a glance reminded me of the Unity hierarchy inspector.

A felicitous feature creep

The combination of Electron as an authoring environment and a Three.js-based ES6 runtime resulted in a surprisingly complete and enjoyable game development experience. There are limitations to this approach, for sure, but I’ve already started fleshing out the resulting engine, and I hope to use it more and more in my personal practice. In what may be the most egregious (and felicitous) case of feature creep of all time, this platform originally intended to share research papers—the web—has grown to enable one of my favorite game development experiences to date.