It’s 10 a.m. on a Monday morning. After a cup of coffee and a quick scan through your emails, you’re ready to start the week with a new greenfield project. In this universe, you’re a tech lead, and your job is to help your team deliver this project. What are you going to do?

Usually, you’d create an MVP (minimum viable product) as quickly as possible in order to gather data directly from your users. You’d design an architecture and choose a database, either SQL or NoSQL, depending on your product needs. Perhaps you’d then create a monolith for the API layer to minimize the overhead of multiple CI/CD pipelines, and you’d configure the recommended observability tools. You’d focus on the business logic of the application and, last but not least, create a client-side application, probably using a well-known framework like Vue, Angular, or React. If you’re lucky, after a few months, you’ll have a product up and running and many users subscribing to the service.

Let’s say this project is indeed successful, and the company decides to invest in it further. To support this work, you’ll have to hire more people. Suddenly, you find yourself with way more developers than you started with. Looking at the API dashboards, you also realize that some APIs are being consumed in entirely different ways than you’d originally planned. Some are highly cacheable, so the CDN is taking the main hit; others are directly hitting your backend thousands of times per second.

Scaling the entire monolith horizontally won’t fly in this universe. (To do so would risk scaling all of the APIs instead of just the ones you truly need, which could lead to problems like throughput bottlenecks or difficulties maintaining the codebase across such a large team.) So what are you going to do?

A microservices architecture—which allows you to work independently, deploy autonomously, scale individual services, and pick the right technical solutions for the job—is a well-known and widely adopted solution for this problem. We’re getting somewhere. But what’s happening on the frontend?

For many projects, the frontend remains as is: a single-page application (SPA) and/or a server-side rendering application that just grows and grows with every new feature added to the platform. In this scenario, distributed teams are probably working on the same codebase, inheriting decisions made by the handful of people who started the project in a completely different context. But frontend teams face the same organizational and scalability challenges as backend teams. Shouldn’t they—shouldn’t you—have other options?

You could continue on the project’s current trajectory, refactoring where needed and trying to reduce the communication overhead across distributed teams. Or you could try something different. You could apply the principles that microservices leverage on the backend to the frontend, enhancing collaboration and offering developers more freedom to innovate and responsibility for their choices. This solution is called micro-frontends. Let’s see how they work.

Some aspects of micro-frontends

Modeling micro-frontends around business domains

Modeling micro-frontends by following domain-driven design (DDD) principles is both possible and valuable for structuring a scalable organization and creating effective, autonomous teams. While DDD traditionally provides clear direction for managing backend projects, some of those practices may serve the frontend, too. Investing time at the beginning of a project to identify your business domains and decide how to divide the application will help you shape your teams, delineate the boundaries of your project’s domains, and create a ubiquitous language between teams.

A culture of automation

Much like with microservices architecture, your organization can’t afford to have a poor automation culture when it comes to using micro-frontends. You’ll need to make sure your CI and CD pipelines are optimized for a fast feedback loop to embrace this strategy. Getting automation right will result in a smoother adoption of micro-frontends by engineering teams, and will allow them to focus more on the business logic of the platform than on the operations.

Hiding implementation details

Hiding implementation details and working with API contracts is another essential technique for working with micro-frontends. When parts of an application need to share information with one another, such as components or multiple micro-frontends on the same page, you need to define exactly how those elements communicate, especially if different teams developed them.

Developers should define an API contract up front and respect it throughout the development process, especially if it’s spread across multiple teams. This will simplify integration when all elements are ready, while also permitting each team to change their own implementation details without affecting the work of other teams.
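As a rough illustration of what such a contract could look like, here is a minimal TypeScript sketch. The `CartUpdatedMessage` shape, its field names, and the `isCartUpdatedMessage` guard are all hypothetical; they stand in for whatever your teams agree on.

```typescript
// Hypothetical contract agreed on by two teams. None of these names come from
// a real library; they only illustrate the idea of an explicit, versioned shape.
interface CartUpdatedMessage {
  type: "cart:updated";
  version: 1;
  payload: { itemCount: number; currency: string };
}

// Runtime guard: TypeScript types disappear after compilation, so a message
// that crosses a micro-frontend boundary is checked before it is trusted.
function isCartUpdatedMessage(value: unknown): value is CartUpdatedMessage {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  if (candidate.type !== "cart:updated" || candidate.version !== 1) return false;

  const payload = candidate.payload;
  if (typeof payload !== "object" || payload === null) return false;
  const fields = payload as Record<string, unknown>;

  return (
    typeof fields.itemCount === "number" &&
    Number.isInteger(fields.itemCount) &&
    fields.itemCount >= 0 &&
    typeof fields.currency === "string"
  );
}
```

Each team can then change how it produces or consumes the message internally, as long as the shape and the `version` field stay as agreed.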

Decentralization

Micro-frontends can also shift some decision-making from tech leads to individual development teams working in a particular business domain. These teams are often best positioned to make certain technical decisions, such as what design patterns to apply, which tools to use, or simply how to define the project’s structure. But that doesn’t mean teams working on projects that use micro-frontends should all proceed without a North Star. Leadership, such as architects or principal engineers, should provide clear guardrails so that teams can make responsible and well-aligned choices—and so that everyone knows when and how to operate without having to wait for a centralized decision.

Independent deployments

Micro-frontends also allow you to deploy independent artifacts. Imagine being able to deploy at your own speed, without having to coordinate with multiple teams and without facing down external dependencies—imagine the benefit to efficiency and innovation.

Isolating failure

Isolating failure isn’t a huge problem in single-page applications because of their architecture. With micro-frontends, however, it may be more challenging. Often, micro-frontends require lazy loading part of the application or composing a view at runtime. In both cases, you can end up with errors due to network failures or 404s. Consider providing alternative content or hiding a specific part of the application to improve the user experience when errors occur.
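One way to provide alternative content is to wrap every load in a helper that never throws. The sketch below assumes a hypothetical `loadMarkup` function (for example, a wrapper around a network request) and an arbitrary three-second default timeout; adjust both to your context.

```typescript
// Result of trying to load one micro-frontend. The shell renders `markup`
// in either case, so a failure never leaves a hole in the page.
type MfeLoadResult =
  | { ok: true; markup: string }
  | { ok: false; markup: string; reason: string };

// `loadMarkup` is a hypothetical loader injected by the caller, which keeps
// this sketch independent of any framework or transport.
async function loadWithFallback(
  loadMarkup: () => Promise<string>,
  fallbackMarkup: string,
  timeoutMs = 3000,
): Promise<MfeLoadResult> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_resolve, reject) => {
    timer = setTimeout(
      () => reject(new Error(`timed out after ${timeoutMs} ms`)),
      timeoutMs,
    );
  });

  try {
    // Whichever settles first wins: the real content or the timeout.
    const markup = await Promise.race([loadMarkup(), timeout]);
    return { ok: true, markup };
  } catch (error) {
    const reason = error instanceof Error ? error.message : String(error);
    return { ok: false, markup: fallbackMarkup, reason };
  } finally {
    clearTimeout(timer);
  }
}
```

Passing an empty string as `fallbackMarkup` hides the failed part of the page; passing a short message shows alternative content instead.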

The micro-frontends decisions framework

How can you apply a micro-frontends architecture to a new or existing project? Your approach will depend on your operational context.

You’ll need to make some architectural decisions up front to shape your design. These include how to define a micro-frontend, how to orchestrate the different views, and how to compose the final view for the user. Let’s review them here.

Defining micro-frontends

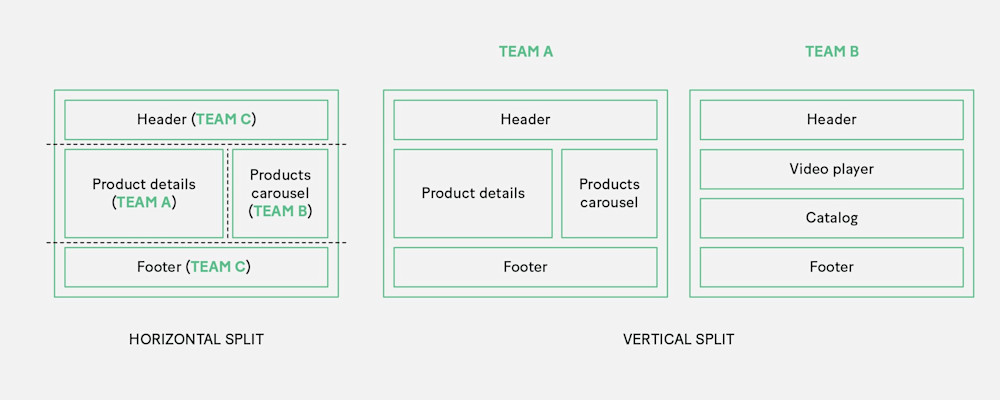

You can define micro-frontends in two ways: horizontal, which allows multiple micro-frontends per page; and vertical, which allows one micro-frontend per business domain.

Opting for a horizontal design means you’ll split a view into multiple parts, which may or may not be owned by the same team. The challenge is to ensure that these different parts have a cohesive look and feel. Bear in mind that with this approach, we’re breaking one of the first principles we’ve defined for micro-frontends: model around a business domain. (Though I’d also say it’s hard to consider a footer a business domain!)

Opting for vertical splitting means you’ll look at the same problem from a business rather than a technical point of view. You’ll assign each business domain, such as the authentication UI or the catalog UI, to a team. This enables each team to become expert in its domain, which, as I noted earlier, is especially valuable when teams have more agency to employ that expertise. In fact, each domain team can operate with full autonomy, regardless of how many views its domain comprises.

Of the two, vertical splitting is closest to the traditional SPA or server-side rendering approach. It doesn’t heavily fragment the implementation, and each team truly owns a specific area of the application.

Composing micro-frontends

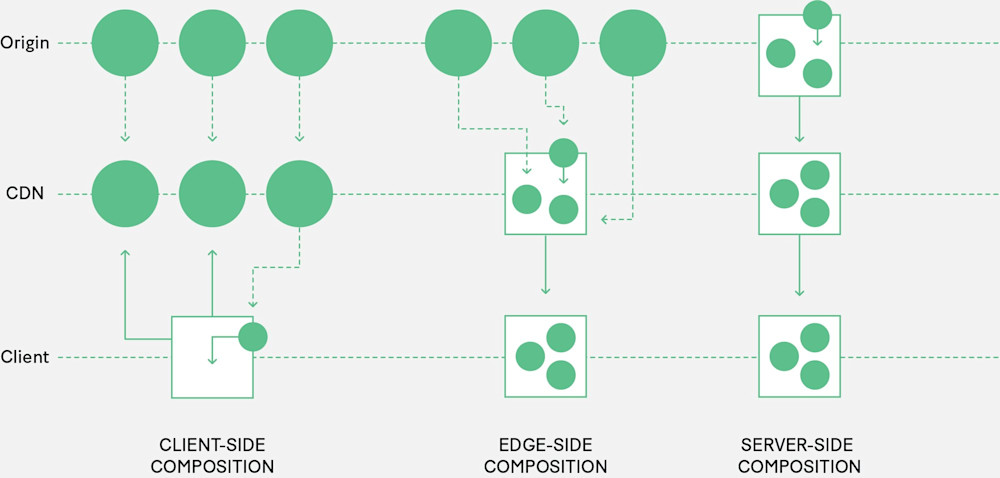

There are three ways to compose a micro-frontends architecture:

Client-side composition

Edge-side composition

Server-side composition

Deciding which approach fits the project is nontrivial, and I truly believe there is no right or wrong answer. The context and parameters of your work should lead you to the right solution.

Client-side composition

In this architecture pattern, an application shell loads multiple micro-frontends directly from a CDN (or from the origin if the micro-frontend is not yet cached at the CDN level), often with JavaScript or an HTML file as the micro-frontends’ entry point. This way, the application shell can dynamically append the DOM nodes (in the case of an HTML file) or initialize the JavaScript application (if the entry point is a JavaScript file).

It’s also possible to use a combination of iframes to load different micro-frontends. Otherwise, we could use a transclusion mechanism on the client side via a technique called client-side include, which involves lazy loading components inside a container using a placeholder tag and parsing all the placeholders to replace them with the corresponding component. Client-side composition works well for both vertical and horizontal splitting, and is particularly useful when you have highly skilled frontend teams who may be less keen on or less experienced with full-stack solutions. I suggest using this pattern in environments in which you have control over the final targets: desktop applications, enterprise applications, or web platforms with a strong client-side component.
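To make the client-side include idea more concrete, here is a minimal sketch. It assumes a hypothetical `<mfe-include src="..."></mfe-include>` placeholder tag and a `fetchFragment` function supplied by the shell. It works on an HTML string so that it can run anywhere; in a browser, you’d more likely walk the DOM and replace the placeholder elements directly.

```typescript
// Replaces every <mfe-include src="..."></mfe-include> placeholder (a made-up
// tag for this sketch) with the markup returned by `fetchFragment`.
async function resolveIncludes(
  shellHtml: string,
  fetchFragment: (src: string) => Promise<string>,
): Promise<string> {
  const includeTag = /<mfe-include\s+src="([^"]+)"\s*><\/mfe-include>/g;

  // 1. Parse all placeholders and collect the distinct sources.
  const sources: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = includeTag.exec(shellHtml)) !== null) {
    if (sources.indexOf(match[1]) === -1) sources.push(match[1]);
  }

  // 2. Load the fragments in parallel; a failed fragment becomes empty markup
  //    so one broken micro-frontend does not break the whole view.
  const fragments = new Map<string, string>();
  await Promise.all(
    sources.map(async (src) => {
      try {
        fragments.set(src, await fetchFragment(src));
      } catch {
        fragments.set(src, "");
      }
    }),
  );

  // 3. Swap each placeholder for its fragment.
  return shellHtml.replace(
    includeTag,
    (_tag: string, src: string) => fragments.get(src) ?? "",
  );
}
```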

Edge-side composition

Edge-side composition assembles the view at the CDN level, retrieving your micro-frontends from the origin and delivering the final result to the client. Many CDN providers allow you to use Edge Side Includes (ESI), an XML-based markup language. ESI was introduced to support the possibility of scaling a web infrastructure by exploiting a large number of points of presence around the world provided by a CDN network. However, ESI is not implemented in the same way within each CDN provider, which complicates its usage. Using a multi-CDN strategy, or simply changing providers, could result in multiple refactors and new logic to implement.

Because transclusion at the CDN level doesn’t make sense with vertical splitting, this approach only works with horizontal splitting. Edge-side composition is most useful on static pages that have to scale but don’t require control over every single detail: think of news websites and online catalogs like IKEA, which combine edge-side includes and client-side includes. The CDN takes care of the scalability aspect of the final solution and will serve the user from the closest point of presence possible.
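For a sense of what the origin sends to the CDN, the sketch below builds a page template that contains ESI include tags. The `src`, `alt`, and `onerror` attributes come from the ESI 1.0 language specification, but providers support different subsets of it, so treat the exact attributes as an assumption to check against your CDN’s documentation. The fragment paths and function names are hypothetical.

```typescript
// One fragment the CDN should fetch and inline. `fallbackSrc` maps to the
// ESI `alt` attribute, an alternative URL tried when `src` fails.
interface EsiFragment {
  src: string;
  fallbackSrc?: string;
}

// Escape the two characters that could break out of a double-quoted attribute.
function escapeAttribute(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// onerror="continue" asks the ESI processor to drop the fragment silently
// instead of failing the whole page when it cannot be retrieved.
function esiInclude(fragment: EsiFragment): string {
  const alt = fragment.fallbackSrc
    ? ` alt="${escapeAttribute(fragment.fallbackSrc)}"`
    : "";
  return `<esi:include src="${escapeAttribute(fragment.src)}"${alt} onerror="continue" />`;
}

// Assembles the template served by the origin; the CDN replaces each tag
// with the corresponding micro-frontend before answering the client.
function renderEsiTemplate(fragments: EsiFragment[]): string {
  return ["<body>", ...fragments.map(esiInclude), "</body>"].join("\n");
}
```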

Server-side composition

With server-side composition, micro-frontends are composed into a view at runtime or at compile time, cached at the CDN level, and finally served to the client. In the example in the diagram, the origin server retrieves the micro-frontends and assembles them into the final page. If the page is highly cacheable, it will then be served by the CDN with a long time-to-live (TTL) policy. If the page is personalized for each user, give serious consideration to scalability, as there will be many requests coming from different clients.

Server-side composition is most useful when you want full control of the application. With this approach, remember to delegate caching to the CDN whenever possible, generate your templates on the server, handle page composition at runtime, and, where needed, integrate your web pages with personalized services. You can use either vertical or horizontal splitting, though the latter may make more sense because of its flexibility.
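The caching trade-off described above can be sketched as a small function. It assumes hypothetical fragment loaders and leaves out the HTTP layer entirely; the `Cache-Control` values are one reasonable choice, not the only one.

```typescript
// What the (hypothetical) HTTP layer in front of this function would send.
interface ComposedPage {
  body: string;
  cacheControl: string;
}

async function composeOnServer(
  fragmentLoaders: Array<() => Promise<string>>,
  options: { personalized: boolean; ttlSeconds: number },
): Promise<ComposedPage> {
  // Retrieve all micro-frontends in parallel; a failed one becomes empty markup.
  const results = await Promise.all(
    fragmentLoaders.map(async (load) => {
      try {
        return { html: await load(), failed: false };
      } catch {
        return { html: "", failed: true };
      }
    }),
  );
  const anyFailed = results.some((result) => result.failed);

  let cacheControl: string;
  if (options.personalized) {
    // Per-user pages must never be stored by a shared cache such as a CDN.
    cacheControl = "private, no-store";
  } else if (anyFailed) {
    // Avoid caching an incomplete page for the whole TTL.
    cacheControl = "no-store";
  } else {
    // s-maxage applies to shared caches, which is where the CDN sits.
    cacheControl = `public, s-maxage=${options.ttlSeconds}`;
  }

  return { body: results.map((result) => result.html).join("\n"), cacheControl };
}
```

Refusing to cache a partially failed page is a design choice made for this sketch; you may prefer a short TTL instead, so the origin isn’t hit on every request while a fragment is down.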

Routing micro-frontends

As with composition, there are three ways to route your micro-frontends: server-side, client-side, or edge-side.

Server-side routing, in which a web server returns different static assets based on the path requested, is a pretty standard approach. You can also add parameters in a query string that will be used by the client for communicating between micro-frontends. This orchestration technique can be used with either a vertical or horizontal split.

Client-side routing, in which an app shell contains and loads the micro-frontends, is mainly used when you’ve sliced your applications vertically, so that the app shell loads one micro-frontend at a time instead of a multitude at once. If you’re using a horizontal split, you should have a page—a container like the app shell—that contains the different micro-frontends. By changing the URL, you can load a different page with a different view.
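With a vertical split, the app shell’s routing job boils down to mapping the current path to exactly one micro-frontend. Here is a minimal sketch of that lookup; the `MfeRoute` shape, the path prefixes, and the entry URLs are hypothetical.

```typescript
// One entry per business domain: which paths it owns and where its bundle lives.
interface MfeRoute {
  pathPrefix: string;
  entryUrl: string;
}

// Returns the micro-frontend that owns `pathname`, or undefined if none does.
// The longest matching prefix wins, so "/account/orders" can be owned by a
// different team than "/account".
function resolveMicroFrontend(
  pathname: string,
  routes: MfeRoute[],
): MfeRoute | undefined {
  let best: MfeRoute | undefined;
  for (const route of routes) {
    // Match whole path segments only: "/catalog" owns "/catalog/shoes"
    // but not "/catalogue".
    const prefixWithSlash = route.pathPrefix.replace(/\/$/, "") + "/";
    const matches =
      pathname === route.pathPrefix || pathname.startsWith(prefixWithSlash);
    if (matches && (!best || route.pathPrefix.length > best.pathPrefix.length)) {
      best = route;
    }
  }
  return best;
}
```

The shell would call this whenever the URL changes, unload the current micro-frontend, and load the one at the returned `entryUrl`.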

Edge-side routing, which leverages the latest features of some CDN providers to run code on the edge that orchestrates and returns the static files using a web server, means you don’t have to deal with scalability problems. However, the code running on the CDN should be quick to execute, which can be a limitation in some cases. At the same time, you can return your static assets more quickly than if you were relying on a standard web server implementation, especially considering that the points of presence are closer to the user request.

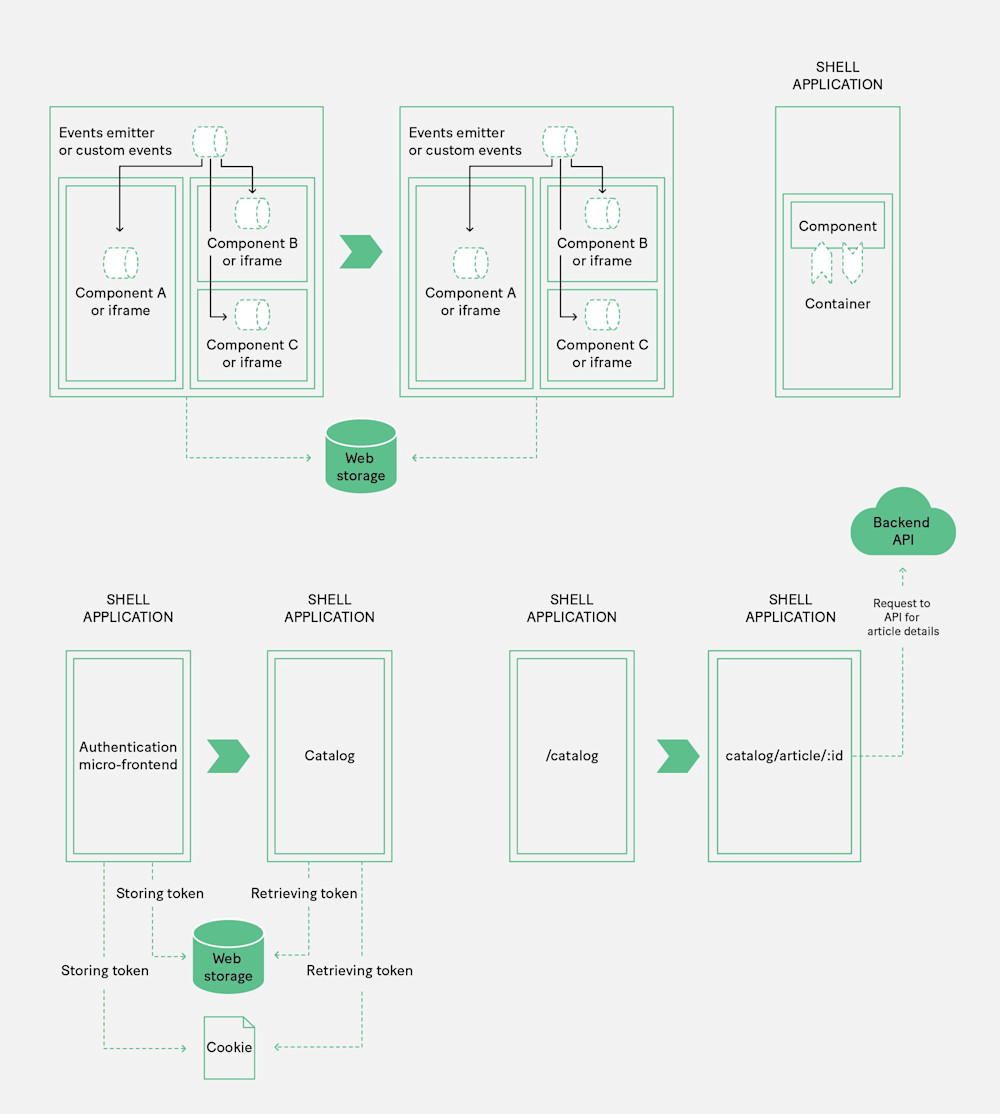

Communication between micro-frontends

If you’ve opted for a horizontal split, your next step is to define how your micro-frontends communicate with each other. One method is to use an event emitter injected into each micro-frontend. This would make each micro-frontend totally unaware of its fellows, and make it possible to deploy them independently. When a micro-frontend emits an event, the other micro-frontends subscribed to that specific event react appropriately.
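An injected event emitter can be very small. The sketch below is one possible shape, not a reference implementation; the `MicroFrontendBus` class and the event names used with it are hypothetical.

```typescript
type BusHandler<T> = (payload: T) => void;

// `Events` maps each event name to its payload type, which doubles as the
// contract between the micro-frontends that share the bus.
class MicroFrontendBus<Events> {
  private readonly handlers = new Map<keyof Events, Set<BusHandler<unknown>>>();

  // Returns a function that removes the subscription again.
  subscribe<K extends keyof Events>(
    eventName: K,
    handler: BusHandler<Events[K]>,
  ): () => void {
    const registered = this.handlers.get(eventName) ?? new Set<BusHandler<unknown>>();
    registered.add(handler as BusHandler<unknown>);
    this.handlers.set(eventName, registered);
    return () => {
      registered.delete(handler as BusHandler<unknown>);
    };
  }

  emit<K extends keyof Events>(eventName: K, payload: Events[K]): void {
    const registered = this.handlers.get(eventName);
    if (!registered) return;
    // Iterate over a copy so handlers can unsubscribe while being notified.
    for (const handler of Array.from(registered)) {
      try {
        handler(payload);
      } catch (error) {
        // A failing subscriber must not stop the others from reacting.
        console.error(`handler for "${String(eventName)}" failed`, error);
      }
    }
  }
}
```

The container creates one bus and passes it to each micro-frontend at initialization. A cart micro-frontend might call `bus.emit("cart:updated", { itemCount: 3 })`, while a header micro-frontend subscribes to the same event name without knowing who emits it.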

You can also use custom events. These have to reach the window level in order to be heard by other micro-frontends, which means that every micro-frontend listens for events on the window object. To communicate, a micro-frontend either dispatches an event directly on the window object or lets the event bubble up to it.

If you’re working with a vertical split, you’ll need to understand how to share information across micro-frontends. For both horizontal and vertical approaches, think about how views communicate when they change. You can pass variables in the query string, or use the URL to pass a small amount of data and have the new view retrieve the rest from the server. Alternatively, you can use web storage to temporarily (session storage) or permanently (local storage) store the information to be shared with other micro-frontends.
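Here is a minimal sketch of the query-string option, using the standard `URL` and `URLSearchParams` APIs. The function names, the `shop.example` host, and the parameter keys are hypothetical. Web storage is left out because `sessionStorage` and `localStorage` exist only in the browser.

```typescript
// Called by the view the user is leaving: builds the next view's URL and
// attaches the small amount of data the next micro-frontend needs.
function buildViewUrl(
  baseUrl: string,
  path: string,
  shared: Record<string, string>,
): string {
  const url = new URL(path, baseUrl);
  for (const key of Object.keys(shared)) {
    url.searchParams.set(key, shared[key]); // values are URL-encoded for us
  }
  return url.toString();
}

// Called by the view being loaded: reads only the keys it expects, which
// keeps the query string an explicit contract rather than a free-for-all.
function readSharedParams(
  currentUrl: string,
  allowedKeys: string[],
): Record<string, string> {
  const params = new URL(currentUrl).searchParams;
  const result: Record<string, string> = {};
  for (const key of allowedKeys) {
    const value = params.get(key);
    if (value !== null) result[key] = value;
  }
  return result;
}
```

Anything larger than an identifier or two is better retrieved from the server by the new view, since URLs are visible, bookmarkable, and limited in length.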

Embrace the micro-world

These are just a few of the considerations and challenges you’ll encounter when implementing micro-frontends. (Others include whether or not to share components, how developed your design system should be, and which approach to use in order to be technology agnostic, if desired.) But these are the key decisions you’ll face when you decide to use a micro-frontends architecture to scale your projects.

While micro-frontends offer the benefits of more independent teams, autonomous deployment, and faster innovation, they’re no silver bullet. Context is key to understanding the trade-offs. Invest in understanding your business needs, ensure you are crystal clear on what you need to build, and then go ahead and embrace the micro-world.