On the surface, the process is straightforward: Site reliability engineers at Yelp sometimes shift traffic to prevent user-facing errors. Under the hood, however, it involves a complex choreography between production systems, infrastructure teams, and hundreds of developers and their services. This is the story of how Yelp’s production engineering and compute infrastructure teams implemented a failover strategy by finding a balance between reliability, performance, and cost efficiency.

What’s a traffic failover?

Yelp serves requests out of two regional AWS data centers, one on each U.S. coast. Read-only requests, which constitute the bulk of user traffic, are sent to the nearest data center, with additional logic to ensure the load is evenly distributed between the two regions. Sometimes, one region becomes unhealthy due to a bad infrastructure configuration, an impaired critical data store, or, on rare occasions, an AWS issue. When any of these happens, we could be serving users HTTP 500 errors and need to act quickly.

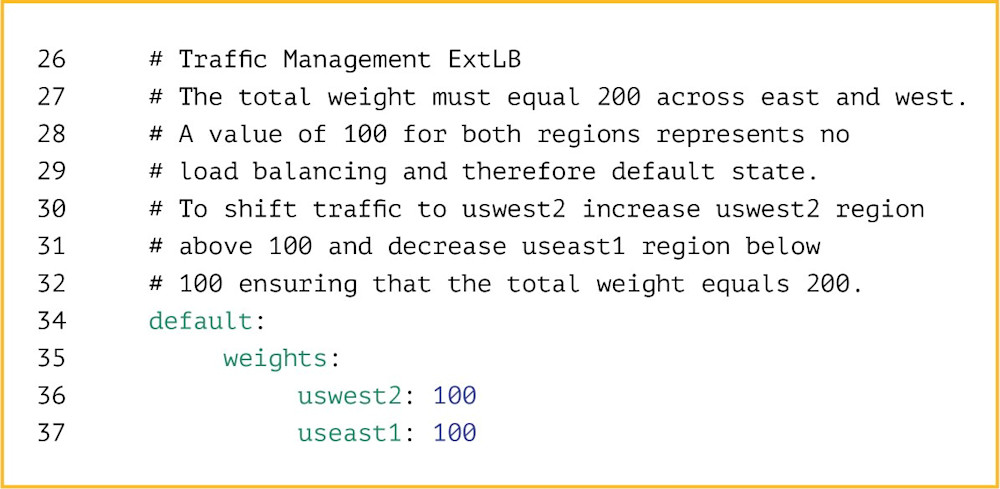

To mitigate such outages, one tool at Yelp’s disposal is the failover: the ability to quickly shift traffic from the unhealthy region to the healthy one. A partial traffic shift alleviates pressure on impaired systems and allows them to recover. The shift can also be total: a full failover. All we need to do to shift traffic is update a Git-controlled YAML file. But even during an emergency, merging and pushing the change requires approvals, typically from the secondary on-call production engineer, a manager, or an engineer involved in the ongoing incident.
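To make the difference between a partial and a full failover concrete, here is a minimal Python sketch of the weight arithmetic. The layout of the real YAML file is not described in this article, so the region names (`west`, `east`), the percentage weights, and the `shift_traffic` helper are all hypothetical.

```python
def shift_traffic(weights, unhealthy, fraction):
    """Move `fraction` (0.0 to 1.0) of the unhealthy region's traffic share
    to the remaining regions, split evenly among them.

    `weights` maps a region name to its share of traffic, in percent.
    Hypothetical helper: the real config format is an assumption.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    healthy = [region for region in weights if region != unhealthy]
    if not healthy:
        raise ValueError("no healthy region left to receive traffic")

    moved = weights[unhealthy] * fraction
    shifted = dict(weights)  # leave the caller's mapping untouched
    shifted[unhealthy] = weights[unhealthy] - moved
    for region in healthy:
        shifted[region] = weights[region] + moved / len(healthy)
    return shifted


normal = {"west": 50, "east": 50}              # assumed even split
partial = shift_traffic(normal, "west", 0.5)   # partial failover
full = shift_traffic(normal, "west", 1.0)      # full failover
```

With these assumed numbers, the partial shift leaves the unhealthy region with a quarter of global traffic, while the full failover sends everything to the healthy region.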

On-call engineers at Yelp regularly practice partial and full failovers to ensure our infrastructure can handle the sudden change in load and our teams remain comfortable executing the procedure. While the failover itself is a simple config change, situations that require full failovers are often stressful and unpredictable. The primary on-call engineer needs to be familiar with the process to avoid additional strain.

When failovers fail

Major shifts in traffic patterns can overwhelm the healthy region that’s now serving global traffic. In Yelp’s early days, we “melted” a healthy region on numerous occasions by sending too much traffic to one region too quickly. Most of our services and clusters of machines can scale up in minutes, assuming all systems are working as they should, but these are crucial minutes we can’t spare. Our response needs to be instant.

Furthermore, in a constantly evolving infrastructure, adding capacity to production can be complicated—a recent change in low-level configuration could slow or even prevent us from getting new, healthy machines. This can quickly devolve into a worst-case scenario where we’re unable to scale up the healthy region and end up serving HTTP 500 errors to users.

Keeping double capacity

A good way to prevent meltdowns is to keep extra compute capacity around at all times.

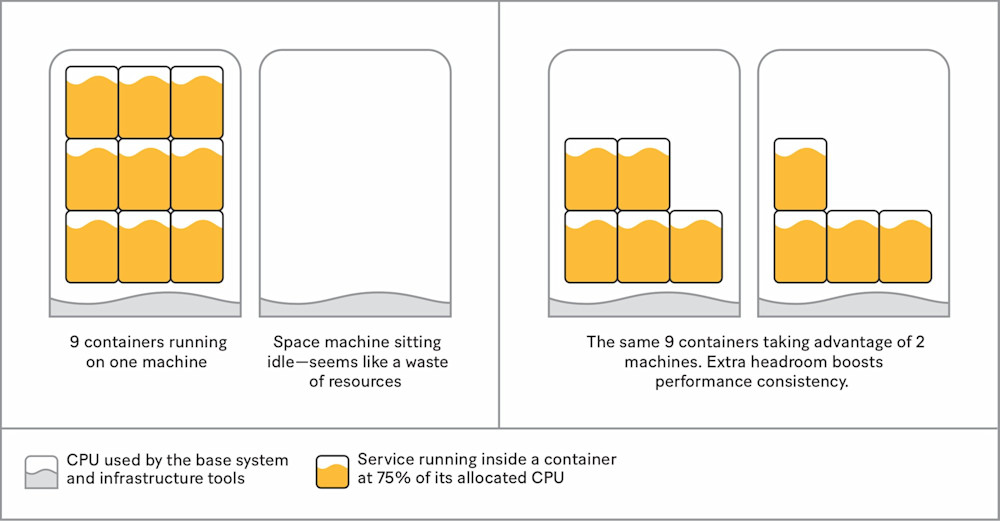

One way to do this is to have more machines available. By doubling the number of running machines, we always have the compute capacity we need to handle failovers. This also means we don’t need to add machines in an emergency, which removes one step in the failover process and, more importantly, reduces dependency on the compute infrastructure team if something goes wrong provisioning these instances.

However, keeping idle machines around just in case of a failover can seem like a waste of resources, so we put them to work by distributing the containers we need among all available machines. This way, each machine has just 50 percent of its resources allocated to services in normal situations, allowing it to absorb load spikes and maintain more consistent performance, and it costs no more than leaving the spare machines idle.
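The arithmetic behind the 50 percent figure can be sketched in a few lines of Python. This is an illustration under stated assumptions, not our actual capacity model: it assumes two regions with equal load, identical machines, and abstract load units, and the function names are hypothetical.

```python
import math


def machines_for_failover(region_load, machine_capacity):
    """Machines one region needs to absorb the other region's traffic.

    Assumes two regions carrying the same load, so a full failover
    doubles the load on the surviving region.
    """
    failover_load = region_load * 2
    return math.ceil(failover_load / machine_capacity)


def normal_utilization(region_load, machine_capacity, machines):
    """Fraction of total machine capacity in use outside of a failover."""
    return region_load / (machines * machine_capacity)


# Hypothetical numbers: 400 load units per region, 8 units per machine.
machines = machines_for_failover(region_load=400, machine_capacity=8)
utilization = normal_utilization(400, 8, machines)
```

Sizing each region for the doubled load is what leaves every machine half allocated on a normal day.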

With enough machines to handle failover conditions, we’ve gained reliability (spreading containers across multiple hosts means a single host failure will impact fewer services) and improved performance consistency. However, we still need to address the critical minutes it takes to schedule more copies of our services during an emergency failover. We need traffic shifts to happen in seconds, not minutes, to minimize the number of 500s we may be serving.

Always be ready to shift traffic

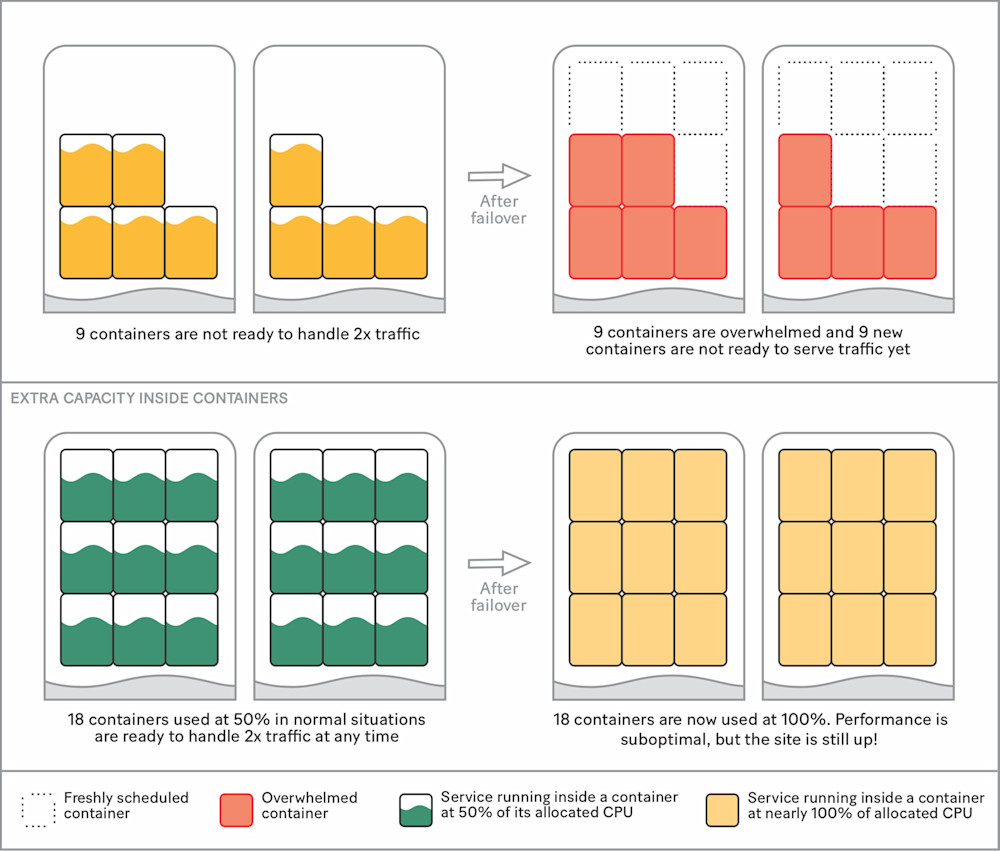

Precious time can be wasted during failovers as we wait for additional containers to be scheduled to handle the new load, download their Docker image, and warm up their workers before finally being able to serve traffic. To shave off those extra minutes, we decided to keep the extra capacity inside the containers as part of the normal service configuration, ensuring we don’t need to add any more during failover. While this may seem like a benign detail, it’s the key to our reliability strategy, since it allows us to always be ready for failover.

Having additional containers in use has a few key advantages:

We can guarantee high performance consistency to users by keeping free resources on all machines. These resources are already reserved by the services.

We already have enough containers to handle a sudden 2x traffic increase.

We’ve cut down our dependency on the compute infrastructure’s ability to schedule containers at a critical time.

In order for this strategy to work, however, containers must be correctly sized and ask for the right amount of resources from the compute platform. In a service-oriented architecture, developers are directly in charge of the configuration of their service. This configuration has to reflect our failover strategy, and every service needs to be configured to use exactly 50 percent of its allocated resources, which is what’s required to handle the doubled load during failover.
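As a rough illustration of what "failover-ready" means for a single service, the following Python sketch compares CPU usage at normal load against the CPU a service has reserved. The service names, the numbers, and the 5 percent tolerance band are assumptions made for the example. It also assumes that CPU usage grows linearly with traffic.

```python
from dataclasses import dataclass


@dataclass
class ServiceUsage:
    name: str
    cpus_allocated: float  # CPUs reserved across all of the service's containers
    cpus_used: float       # CPUs actually consumed at normal load


def failover_status(service, target=0.5, tolerance=0.05):
    """Classify a service against the 50 percent utilization target.

    The tolerance band is an assumption for this sketch.
    """
    utilization = service.cpus_used / service.cpus_allocated
    if utilization > target + tolerance:
        return "undersized"  # cannot absorb a doubled load
    if utilization < target - tolerance:
        return "oversized"   # reserves more than failover requires
    return "ready"


# Hypothetical services and measurements.
services = [
    ServiceUsage("service_a", cpus_allocated=40.0, cpus_used=20.0),
    ServiceUsage("service_b", cpus_allocated=10.0, cpus_used=8.0),
    ServiceUsage("service_c", cpus_allocated=30.0, cpus_used=6.0),
]
report = {service.name: failover_status(service) for service in services}
```

An undersized service would run out of headroom during a failover, while an oversized one reserves capacity it does not need.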

Setting the right values

The main advantage of a service-oriented architecture is greater developer velocity. Developers can deploy the services they own many times a day without having to worry about batching code changes, merge conflicts, release schedules, and so on. Teams fully own their services and associated configurations, including resource allocation like CPU, memory, and autoscaling settings.

Resource allocation is a challenge. In order to be failover-ready, every service needs precise resource allocation to ensure containers use exactly 50 percent of their resources in normal situations. How can we expect software engineers across dozens of teams to fiddle with these settings in production until they get it exactly right?

Finding the optimal settings

Some core components of Yelp, like the search infrastructure, have been optimized for performance over time. Their owners know exactly how the application behaves under heavy production load, how many threads they can use, what the typical wait versus actual CPU time is, how frequent and expensive garbage collection operations are, and so on. However, most teams at Yelp don’t have all this knowledge. Most services work with the default values, and the only “tuning” we need to do consists of bumping up a few resources here and there as the service grows in popularity and complexity.

Abstracting resource declaration

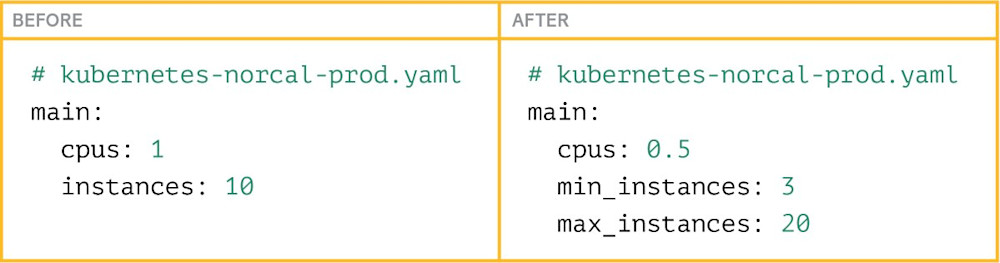

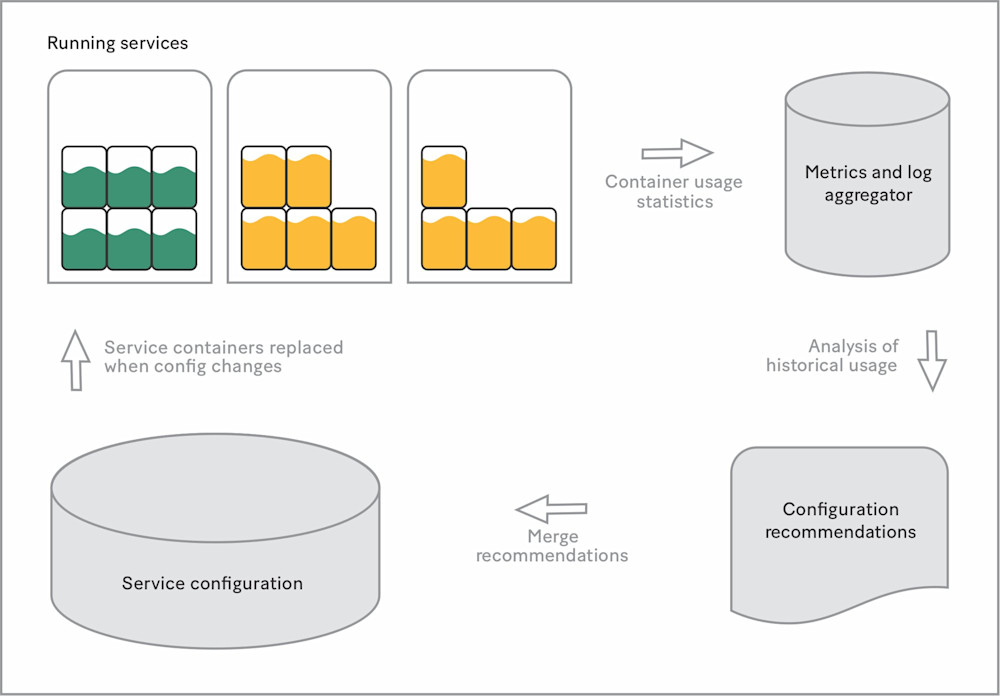

We needed to help our developers find the right settings for their services, so we invested in tooling to monitor, recommend, and even abstract away autoscaling settings and resource requirements for all production services. We now analyze services’ resource usage and generate optimized settings for CPU, memory, disk, and so on. Developers can opt out if they want to: A manually set value will always have priority over templated safe defaults and optimized defaults.
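The priority order described above (manual value first, then optimized defaults, then templated safe defaults) can be sketched with Python's standard `collections.ChainMap`. The setting names and values here are hypothetical, and this is only one way such a lookup could be written.

```python
from collections import ChainMap

# Hypothetical templated safe defaults shared by every service.
SAFE_DEFAULTS = {"cpus": 1.0, "mem_mb": 1024, "disk_mb": 1024}


def resolve_resources(manual, optimized, safe_defaults=SAFE_DEFAULTS):
    """Pick each setting from the highest-priority source that defines it.

    ChainMap searches its mappings left to right, so a manually set
    value wins over an optimized one, which wins over a safe default.
    """
    return dict(ChainMap(manual, optimized, safe_defaults))


# The service owner pinned memory by hand; everything else is generated.
manual = {"mem_mb": 4096}
optimized = {"cpus": 0.6, "mem_mb": 2048}
resolved = resolve_resources(manual, optimized)
```

In this example the pinned memory value survives, CPU comes from the optimized defaults, and disk falls back to the safe default.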

Generating optimized settings for CPU allows us to ensure that services autoscale correctly and have enough capacity for failover. We also reap reliability benefits. For example, if a service hits its memory limit and is killed, its optimized defaults will automatically be updated within two hours with a higher memory allocation. It now takes us less time to fix this particular issue than it took us to diagnose it in the days before autotuning.
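The memory example could look something like the Python sketch below. The growth factor, the rounding step, and the function name are assumptions for illustration. The article does not describe how the new allocation is actually computed.

```python
import math


def bump_memory_after_oom(current_mb, oom_killed, growth=1.25, step_mb=64):
    """Return the next memory allocation for a service, in megabytes.

    If the service was killed for hitting its memory limit, raise the
    limit by `growth` and round up to a multiple of `step_mb`.
    Both parameters are assumed values, not the real tuning rules.
    """
    if not oom_killed:
        return current_mb
    raised = current_mb * growth
    return math.ceil(raised / step_mb) * step_mb


after_kill = bump_memory_after_oom(1024, oom_killed=True)
unchanged = bump_memory_after_oom(1024, oom_killed=False)
```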

Autotuned defaults for all

After offering optimized settings as opt-ins for a few weeks and building tooling to monitor the system’s success metrics, we felt confident shifting from a recommendation system to optimized values enabled by default. We call this “autotuned defaults.” Unless its owners specifically opt out, every service now automatically declares and uses an optimal amount of resources. A side effect of this change is a significant reduction in compute cost due to the rightsizing of previously oversized services. Best of all, developers enjoy simpler service configurations.

Setting ourselves up for success

Properly allocating the extra capacity required for an emergency failover means we always have the compute capacity we need. Automating service resource allocation removes a considerable burden for service owners and improves the process as a whole. In combination, these strategies simplify incident response and reduce downtime during the most serious outages.

One of the most interesting aspects of our failover and autoscaling strategy is organizational. By including the extra margin for failover inside every container, multiple teams have become more efficient. The production engineering team now has control over all service configurations, which is a prerequisite for a successful failover. The compute infrastructure team can focus on enhancing the platform without worrying too much about its ability to handle a failover. And developers don’t need to go through the time-consuming process of tuning the resource allocation or autoscaling configuration for their services. Instead, they can focus on what they do best: building a better product for our users and community.