We all rely on different signals in an office to understand the state of the team and identify warning signs that something may require our attention—or intervention. Because we approach communication with the same goals (to express something or understand something) but different methods, it’s critical to understand that a shift to remote for any team means our methods are likely to diverge further, and some methods might go away completely. For instance, in a remote setting, nonverbal cues—like body language, eye contact, and gestures—may no longer be available. Leaders will need to adopt new tools and ways to gain visibility, drive alignment, and ultimately empower teams to do their best work.

Add engineering metrics to your tool kit

Although metrics can be synonymous with reductive methods of measuring performance that routinely cause more harm than good (e.g., counting lines of code to measure engineering productivity), there are ways to deploy them to improve the health and dynamics of a team’s communication styles and skills. While engineering metrics can be intimidating if we’re not thoughtful about them, they can also help drive organizational change when used in context and as a supplement to qualitative data. At their best, metrics offer visibility into what’s happening, but to understand their underpinnings, you and your team need to communicate and collaborate.

In their 2018 book Accelerate, Nicole Forsgren, Jez Humble, and Gene Kim share their insights from four years of research observing the overall performance of over 2,000 engineering teams of different sizes and in different industries to understand how to measure performance and what drives it. They noted that high-performing teams achieve and maintain their pace and stability by tracking and improving on four key metrics: deploy frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate. These four key metrics help engineering leaders understand whether their team’s performance is objectively low, medium, or high, based on the book’s framework.
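As a rough illustration of how the four key metrics could be derived from deployment records, here is a minimal Python sketch. The `Deployment` record shape, its field names, and the sample data are assumptions made for this example and do not come from the book; recovery time is averaged with the mean, as MTTR is commonly defined.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    first_commit_at: datetime
    deployed_at: datetime
    failed: bool = False                    # did this change cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def four_key_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Summarize the four key metrics over a reporting period.

    Assumes at least one deployment in the period.
    """
    failures = [d for d in deployments if d.failed]
    restore_hours = [
        hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deploys_per_day": len(deployments) / period_days,
        "mean_lead_time_hours": mean(
            hours(d.deployed_at - d.first_commit_at) for d in deployments
        ),
        "mean_time_to_recovery_hours": mean(restore_hours) if restore_hours else 0.0,
        "change_failure_rate": len(failures) / len(deployments),
    }


# Made-up sample data covering one week.
deployments = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 13)),
    Deployment(
        datetime(2024, 1, 2, 9),
        datetime(2024, 1, 3, 9),
        failed=True,
        restored_at=datetime(2024, 1, 3, 11),
    ),
    Deployment(datetime(2024, 1, 4, 10), datetime(2024, 1, 4, 12)),
    Deployment(datetime(2024, 1, 5, 8), datetime(2024, 1, 5, 18)),
]

metrics = four_key_metrics(deployments, period_days=7)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

The numbers only become useful once the team agrees on what counts as a deployment and a failure, which is a conversation rather than a calculation.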

The next step is to consider how to drive positive change within your organization. For that you’ll want to specifically discern your team’s needs, processes, and business goals. When you’re leading a remote team working across many time zones, you’ll often have different considerations compared to co-located teams. For example, the authors of Accelerate suggest that high-performing teams should target an average lead time (time from first commit to code deployed in production) of less than an hour. For an asynchronous team, that specific goal might not be reasonable—or could even be impossible—but that doesn’t mean you can’t (or shouldn’t) use metrics to have better visibility into how you work and measure improvement.

To get started responsibly, determine your team’s objectives and where your organization needs to be heading. During this process you’ll want to collect as much feedback as possible on how your team works together, any current challenges, and areas that need improvement. Utilize retrospectives and 1:1s to understand the pain points your team is experiencing. Take note of common themes that emerge from these conversations.

For instance, say during a 1:1 an engineer expresses frustration at waiting a long time for a code review. Later on, another engineer tells you code review has been challenging because pull requests are too big. As a leader, you can connect the dots and dig deeper into the code review process. Metrics can supplement these conversations and paint a more comprehensive picture. Adding objective data points allows you and your team to better grasp the current state of your processes and have discussions that go beyond gut feel.

Now that we’ve seen how metrics can drive continuous improvement, let’s discuss two tactics for using engineering metrics effectively.

Debug your processes

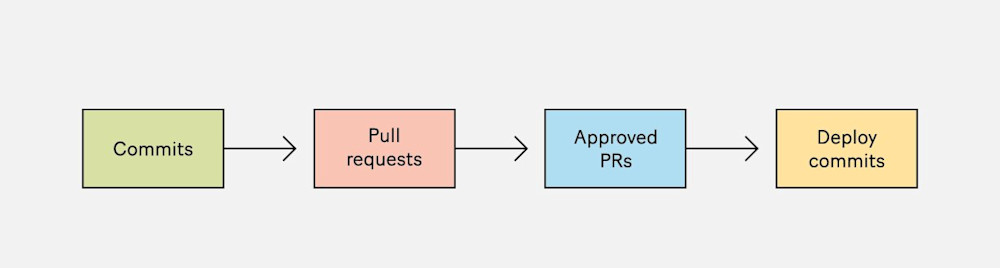

Everyone has their own mental model of how their organization works. For instance, the way your team deploys code changes into production consists of a set of events that follows a certain flow. Either consciously or unconsciously, you use these high-level models to plan capacity, scope and prioritize projects, and more. Take advantage of this model by augmenting it with engineering metrics: use them to understand what’s happening throughout the flow and to identify bottlenecks and opportunities for improvement.

Let’s return to our earlier example, in which conversations with your team indicated the code-review process may warrant a closer look. Before diving into a detailed model of your coding process, I recommend starting broad and simple:

Draw the model as a set of discrete events, each of which can be broken down into additional areas you wish to focus on. This model serves as your starting point. It’s a good opportunity to make sure you and your team share an understanding of what the process currently looks like. Once the model is established, you can augment it with engineering metrics:

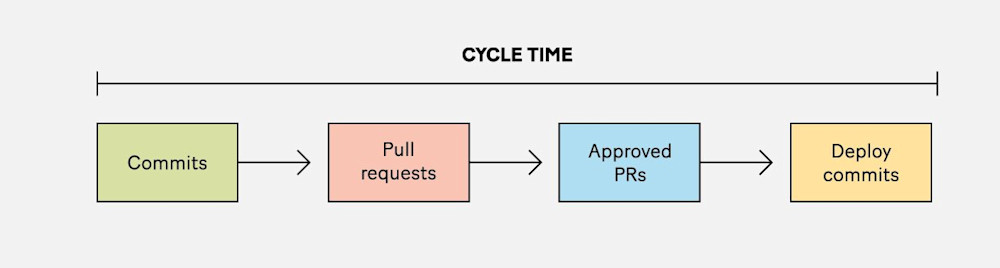

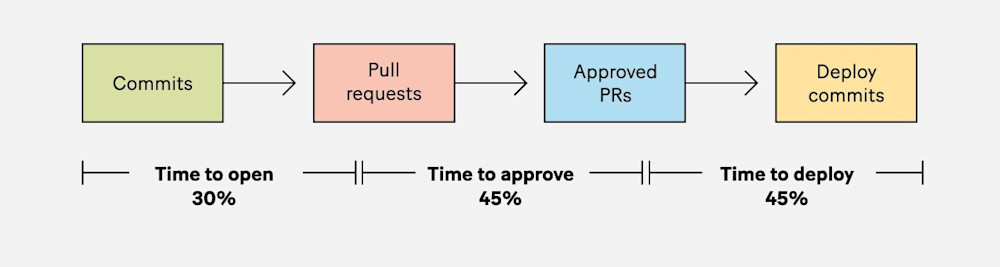

Look at cycle time across your organization, which, as shown in the diagram above, measures the time from first commit to deployment. Observing trends over time can help you spot any sudden changes and identify whether the cycle time is generally trending up, trending down, or holding steady. To better understand the model and decide which specific areas to focus on, break down the cycle time into more granular metrics:

In our example scenario, the quantitative data shows a long “time to approve.” On its own, this metric is not necessarily a good or a bad thing. But since the code review step takes the longest in this process, any improvements you make are likely to have a positive impact on your overall process.
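The breakdown described above can be sketched in a few lines of Python. This is an illustration under stated assumptions: the event names, the stage names other than “time to approve,” and the sample timestamps are all hypothetical, and your own flow may have different steps.

```python
from datetime import datetime, timedelta
from statistics import median

# Ordered events in the assumed flow; each adjacent pair defines one stage.
EVENTS = ["first_commit", "pr_opened", "approved", "merged", "deployed"]
STAGES = ["time to open", "time to approve", "time to merge", "time to deploy"]


def stage_durations(change: dict[str, datetime]) -> dict[str, float]:
    """Hours spent in each stage for a single change."""
    return {
        stage: (change[end] - change[start]) / timedelta(hours=1)
        for stage, start, end in zip(STAGES, EVENTS, EVENTS[1:])
    }


def median_breakdown(changes: list[dict[str, datetime]]) -> dict[str, float]:
    """Median hours per stage across all changes."""
    per_change = [stage_durations(c) for c in changes]
    return {stage: median(d[stage] for d in per_change) for stage in STAGES}


def slowest_stage(breakdown: dict[str, float]) -> str:
    """Name of the stage with the largest median duration."""
    return max(breakdown, key=breakdown.get)


def at(day: int, hour: int) -> datetime:
    return datetime(2024, 3, day, hour)


# Made-up sample data: three changes moving through the flow.
changes = [
    {"first_commit": at(4, 9), "pr_opened": at(4, 12), "approved": at(5, 14),
     "merged": at(5, 15), "deployed": at(5, 17)},
    {"first_commit": at(6, 10), "pr_opened": at(6, 11), "approved": at(7, 9),
     "merged": at(7, 10), "deployed": at(7, 12)},
    {"first_commit": at(11, 8), "pr_opened": at(11, 13), "approved": at(12, 19),
     "merged": at(12, 21), "deployed": at(13, 9)},
]

breakdown = median_breakdown(changes)
for stage, value in breakdown.items():
    print(f"{stage}: {value:.1f} h")
print("Longest stage:", slowest_stage(breakdown))
```

The median is used here instead of the mean so that one unusually slow change does not dominate the picture; either choice is defensible as long as the team knows which one it is looking at.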

One important advantage of creating mental models with your team is increased transparency, which is of particular importance when working remotely because it allows everyone to have visibility into existing processes and decreases opportunities for misalignment. Documenting your model will also allow you to disseminate information without requiring everyone to be in the same room, and will facilitate asynchronous feedback where team members can add comments or suggestions to the model as needed.

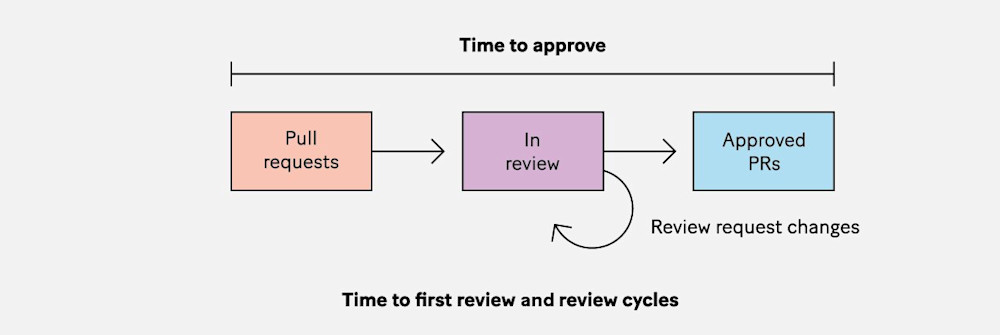

With this augmented model on hand, you can break down the challenging step into further events to better understand what’s happening (or not happening) during code reviews:

Let’s say our team follows a process in which an engineer opens a pull request and marks it ready for review. From there, another engineer either approves it or requests changes. To understand the minimum time a pull request waits for review, we should look into the metric “time to first review.” Because a pull request can go back and forth between the author and reviewers many times before it’s approved, we should also observe the “review cycles” metric.
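To make these two metrics concrete, here is a minimal Python sketch. The `PullRequest` and `Review` record shapes are hypothetical, and the definition of a review cycle used here (one per submitted review, up to and including the first approval) is an assumption; teams and tools define it differently.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Review:
    submitted_at: datetime
    state: str  # "approved" or "changes_requested" (assumed values)


@dataclass
class PullRequest:
    ready_for_review_at: datetime
    reviews: list[Review] = field(default_factory=list)


def time_to_first_review_hours(pr: PullRequest) -> Optional[float]:
    """Hours from "ready for review" to the first review, or None if unreviewed."""
    if not pr.reviews:
        return None
    first = min(r.submitted_at for r in pr.reviews)
    return (first - pr.ready_for_review_at) / timedelta(hours=1)


def review_cycles(pr: PullRequest) -> int:
    """Number of review rounds up to and including the first approval."""
    cycles = 0
    for review in sorted(pr.reviews, key=lambda r: r.submitted_at):
        cycles += 1
        if review.state == "approved":
            break
    return cycles


# Made-up example: two rounds of requested changes, then an approval.
pr = PullRequest(
    ready_for_review_at=datetime(2024, 3, 4, 9),
    reviews=[
        Review(datetime(2024, 3, 4, 15), "changes_requested"),
        Review(datetime(2024, 3, 5, 10), "changes_requested"),
        Review(datetime(2024, 3, 5, 16), "approved"),
    ],
)

print(time_to_first_review_hours(pr))  # 6.0
print(review_cycles(pr))               # 3
```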

At this stage, where you’re digging deeper into a particular step of a process, I recommend slicing the metrics into even smaller components. For example, it could be enlightening to break down time to first review and review cycles by team or by area of the system (e.g., frontend versus backend). This will allow you to identify teams that are outperforming on those metrics and work with them to understand their code review habits and best practices. Then, you can work with other teams to implement these best practices across the organization.
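Slicing a metric by team or by area of the system is essentially a group-by. The sketch below assumes each pull request has already been reduced to a flat record; the field names, team names, and values are invented for illustration.

```python
from collections import defaultdict
from statistics import median


def median_by_group(records: list[dict], key: str, value: str) -> dict[str, float]:
    """Median of `value` for each distinct `key` (e.g., per team or per area)."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for record in records:
        grouped[record[key]].append(record[value])
    return {group: median(values) for group, values in grouped.items()}


# Made-up pull request records.
records = [
    {"team": "payments", "area": "backend", "time_to_first_review_hours": 3.0},
    {"team": "payments", "area": "backend", "time_to_first_review_hours": 5.0},
    {"team": "payments", "area": "frontend", "time_to_first_review_hours": 4.0},
    {"team": "search", "area": "frontend", "time_to_first_review_hours": 20.0},
    {"team": "search", "area": "backend", "time_to_first_review_hours": 26.0},
]

by_team = median_by_group(records, "team", "time_to_first_review_hours")
by_area = median_by_group(records, "area", "time_to_first_review_hours")

print(by_team)  # {'payments': 4.0, 'search': 23.0}
print(by_area)  # {'backend': 5.0, 'frontend': 12.0}
```

A gap like the one between the two teams here is a prompt for a conversation about context (team size, time zones, pull request size), not a ranking.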

The intention behind breaking down metrics by team is expressly not to shame teams that aren’t doing as well. Instead, the purpose is to identify what is working within the organization and determine how it can be scaled so teams work better together. Conversations should be focused on identifying best practices and discussing how teams are going to embrace and adopt them. Going back to our example scenario, a team where everyone is in different time zones and there’s no overlap may have a higher time to first review compared to teams with multiple members in the same or closer time zones. Using metrics can help you better understand the impact of these organizational differences on your workflow.

Outlining existing processes clearly will increase awareness and alignment, which ultimately makes it easier to drive change since everyone on the team has the same level of visibility into current processes. (This becomes even more important when the entire team is remote.) Metrics will bring objective information to conversations and can help paint a fuller picture for everyone when driving change across your organization. While I focused on code review processes here, you can follow this approach to drive conversations around other areas of the development process.

Measure progress toward team improvement goals

Be careful not to confuse metrics with goals. As Goodhart’s law (as summarized by Marilyn Strathern) states: “When a measure becomes a target, it ceases to be a good measure.” In other words, when people know what is being measured, they adapt their behavior, and the results are unlikely to be what was originally intended.

To reduce the risk of this happening, I recommend involving your team when creating strategies for both improving and setting goals. Clear communication with the impacted team from the outset will help everyone understand why certain changes are coming.

When setting team improvement goals, leverage your mental model to identify which improvements could have the greatest impact on the overall process. It might be tempting to try to improve multiple areas at once, but I recommend you start by focusing on one area. That way, it’s easier to manage—especially when it involves multiple teams or the whole engineering organization—and it reduces the innate risk that comes with introducing change.

Let’s return to the code review example we used during the debugging phase. You’ve scheduled a meeting with your team to discuss how you’re going to improve the code review process, and you’ve set a goal you can work toward together. When determining this goal, I recommend agreeing on key terms, such as the estimated due date, how frequently you’ll meet to discuss updates, and how you’re going to measure progress. This will ensure that everyone on the team has clarity not just on the goal, but also on how progress is being tracked.

Deciding how to measure progress toward a goal can be tricky. Since your goal is focused on team improvement, choose a couple of quantifiable indicators that will help signal whether the changes your team is adopting are driving trends in a positive direction. Start by selecting engineering metrics related to the code review process. Try to limit yourself to a few; you don’t want to be overwhelmed with so many metrics to track that you lose sight of what you want to accomplish.

Also, I recommend using percentage targets to measure progress instead of absolute values. For example, a possible target could be “time to first review of less than six hours for 95 percent of pull requests,” rather than “time to first review of less than six hours for all pull requests.” Note how the former allows for outliers (which are normal and will likely always happen, no matter how good your processes get, especially in remote or distributed organizations with teams spread out across the globe) while still encouraging your team to follow what you consider best practices to drive improvement.
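The difference between the two kinds of target is easy to see in a small Python sketch. The function names and sample values are hypothetical; the six-hour threshold and 95 percent share come from the example target above.

```python
def share_within(values_hours: list[float], threshold_hours: float) -> float:
    """Fraction of pull requests reviewed in less than `threshold_hours`."""
    within = sum(1 for v in values_hours if v < threshold_hours)
    return within / len(values_hours)


def meets_target(
    values_hours: list[float],
    threshold_hours: float = 6.0,
    target_share: float = 0.95,
) -> bool:
    """True if at least `target_share` of pull requests beat the threshold."""
    return share_within(values_hours, threshold_hours) >= target_share


# Made-up time-to-first-review values: 19 quick reviews and one outlier.
times = [1.0, 2.5, 3.0, 4.0, 5.5] * 3 + [0.5, 2.0, 3.5, 5.0] + [30.0]

print(share_within(times, 6.0))                 # 0.95
print(meets_target(times))                      # True: the outlier is tolerated
print(meets_target(times, target_share=1.0))    # False: "all pull requests" fails
```

With the percentage target, a single 30-hour wait does not erase an otherwise healthy week, whereas the absolute target would report failure.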

Depending on your organization, you may be able to use the same indicators to measure progress across teams, or different teams may need separate considerations according to the kind of work they do. It’s best to allow teams to take ownership of the goal and adjust accordingly. This is especially important when working with remote teams, where geographical differences alone may require them to set different targets to better suit their needs.

When those responsible for goals meet to discuss progress, it’s important they feel empowered to share whether the metrics are truly helping or if they should be changed. It’s normal and encouraged to iterate as you learn more. Work with your team to commit to trying changes for a set period of time (at least a couple of months). After that, discuss whether any adjustments to how you’re measuring progress are necessary.

Long live teamwork

Armed with these tactics, remote engineering teams are better equipped to continuously improve how they work together. Having more transparency and visibility using qualitative and quantitative data empowers engineers to make better-informed decisions. At the end of the day, improving your processes and practices not only allows you to strengthen your team skills and develop software more quickly, but also to deliver stronger business outcomes that benefit your company as a whole. Then, it almost doesn’t matter where your team is located or distributed, because you’ve got the tools you need to manage properly from wherever you are.